Welcome to part two of a blog series based on my latest PluralSight course: Applied Windows Azure. If you missed part one, you can read it here.

Motivation

Azure Worker Roles are execution units that can be used to offload long-running, compute-intensive tasks. You can also think of them as “managed” VMs that execute custom tasks for you. I refer to the VMs as “managed” because you don’t have to worry about the OS, patches, fault-tolerant setup, etc. Worker roles are backed by a 99.95% uptime SLA. Furthermore, it is possible to dynamically scale worker roles based on load (both horizontally by adding more worker role instances, and vertically by provisioning larger VMs).

Scenarios

Based on the above description, it’s pretty easy to think of scenarios where worker roles can come in very handy. Perhaps you need to process or encode a large number of images or videos, or you need to run a complex simulation that requires lots of computational power. Azure Worker Roles are a good fit for such workloads.

Core Concept

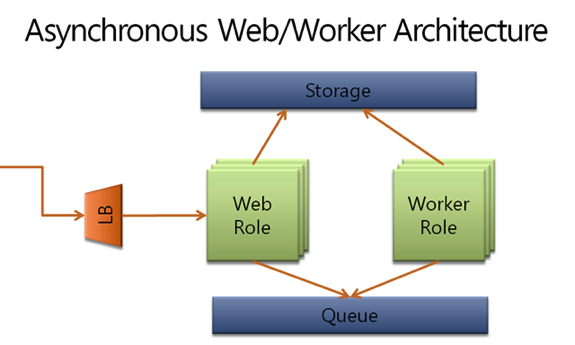

The diagram above depicts the core concept on which you would typically base the use of Azure Worker Roles. You interact with an Azure Worker Role asynchronously through some queue-based scheme. In theory, you could also interact with a worker role synchronously by hosting, say, a public-facing web service. (It would need to be a self-hosted WCF endpoint, since no IIS components are installed on a worker role by default.)

But generally you want to place the Azure Worker Roles behind a queue, as this allows you to decouple the worker roles from the incoming requests. In other words, you can scale the number of worker role instances up and down based on the desired throughput. You can even let incoming requests sit in a persistent queue for a while until a certain threshold of tasks to process has been reached. Since the maximum message size in a queue is limited (64 KB for Azure Storage Queue, although it is quite a bit more if you use Azure Service Bus Queue), you typically want to place all the inputs needed for the computation (e.g. a large video file that needs to be processed) into an Azure Storage Blob and then place a link to the Blob in the queued message. Once the computation is complete, a worker role can place the results of the computation back into Azure Storage.
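The hand-off described above (large input in a blob, small pointer in the queue) can be sketched as follows. This is only an illustration: a plain dictionary stands in for Azure Storage Blobs, Python's `queue.Queue` stands in for the Azure queue, and the names `submit_job`, `process_next`, `blob_store`, and `task_queue` are made up for this example rather than taken from any Azure SDK.

```python
import json
import queue
import uuid

# In-memory stand-ins (assumptions for illustration, not Azure SDK objects).
blob_store = {}             # plays the role of Azure Storage Blobs: name -> bytes
task_queue = queue.Queue()  # plays the role of the queue in front of the workers


def submit_job(payload):
    """Store the large input as a 'blob' and enqueue only a small pointer to it."""
    blob_name = "inputs/" + str(uuid.uuid4())
    blob_store[blob_name] = payload
    message = json.dumps({"input_blob": blob_name})
    task_queue.put(message)
    return message


def process_next():
    """Dequeue one message, fetch the referenced input, and store the result."""
    message = json.loads(task_queue.get())
    data = blob_store[message["input_blob"]]
    result_name = message["input_blob"].replace("inputs/", "results/", 1)
    blob_store[result_name] = data[::-1]  # placeholder for the real computation
    return result_name
```

However large the payload is, the queued message stays a few dozen bytes, which is what keeps it under the queue's message size limit.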

In the diagram above, we have more than one instance of the worker role. So how do we prevent multiple worker roles from going after the same task? Fortunately, queue implementations (such as Azure Storage Queue) come with a way to handle such conditions. Once a worker role de-queues a message, the message becomes invisible to other worker roles for a visibility timeout, during which the worker is expected to finish processing and delete the message. (If processing takes longer than the timeout, the message reappears in the queue before it has been completely processed. There are ways to handle this, including extending the invisibility period or forcing each worker role to also acquire an exclusive lease before processing a message.)
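To make the invisibility behavior concrete, here is a toy in-memory queue that mimics it. It is a sketch of the idea only, not the Azure Storage Queue API: the class `VisibilityQueue` and its methods `put`, `receive`, `extend`, and `delete` are hypothetical names chosen for this example.

```python
import time
import uuid


class VisibilityQueue:
    """Toy queue: a received message stays hidden until its timeout expires
    or until the worker deletes it."""

    def __init__(self, clock=time.monotonic):
        self._clock = clock   # injectable so the behavior can be tested
        self._messages = {}   # message id -> [body, time at which it is visible]

    def put(self, body):
        msg_id = str(uuid.uuid4())
        self._messages[msg_id] = [body, float("-inf")]  # visible immediately
        return msg_id

    def receive(self, visibility_timeout):
        """Return (id, body) of the first visible message and hide it."""
        now = self._clock()
        for msg_id, entry in self._messages.items():
            if entry[1] <= now:
                entry[1] = now + visibility_timeout
                return msg_id, entry[0]
        return None

    def extend(self, msg_id, visibility_timeout):
        """Push the invisibility window out, e.g. when work runs long."""
        self._messages[msg_id][1] = self._clock() + visibility_timeout

    def delete(self, msg_id):
        """Remove the message once processing has completed."""
        self._messages.pop(msg_id, None)
```

A worker would call `receive`, do its work (calling `extend` if it needs more time), and call `delete` only after the result is safely stored. If the worker crashes, the message simply becomes visible again and another instance picks it up.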

As you can see, not too complicated a pattern. Break your compute-intensive job into smaller tasks and queue them for processing by a variable number of worker roles.
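A minimal local simulation of this pattern is shown below. Threads stand in for worker role instances and an in-process queue stands in for the Azure queue. The function names `split_into_tasks` and `run_workers` are assumptions for this sketch, and `compute` is whatever function does the real work.

```python
import queue
import threading


def split_into_tasks(items, chunk_size):
    """Break the overall job into chunks of at most chunk_size items."""
    return [items[i:i + chunk_size] for i in range(0, len(items), chunk_size)]


def run_workers(tasks, worker_count, compute):
    """Queue the tasks and let worker_count workers drain the queue."""
    task_queue = queue.Queue()
    for task in tasks:
        task_queue.put(task)

    results = []
    lock = threading.Lock()

    def worker():
        while True:
            try:
                task = task_queue.get_nowait()
            except queue.Empty:
                return  # nothing left to do, so this worker exits
            outcome = compute(task)
            with lock:
                results.append(outcome)

    threads = [threading.Thread(target=worker) for _ in range(worker_count)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return results
```

For example, `run_workers(split_into_tasks(list(range(10)), 3), 4, sum)` sums four chunks on four workers. The partial results arrive in no particular order, so the final aggregation step should not depend on ordering.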

Design Considerations

Here are some design considerations as you look into using Azure Worker Roles:

- How quickly and cost-effectively do you want to compute the results? This will determine the number of worker roles you employ. Also related to this is the size of each worker role instance. How big a VM should power the worker roles? Can you effectively use all the cores available to you?

- At what queue size should you trigger the provisioning of additional worker roles?

- How should you break the overall task into smaller chunks? Smaller chunks mean work is distributed more evenly among worker role instances. However, there is a cost associated with chunking, e.g. the number of read/write operations against Azure Storage, the cost of aggregating the results, etc. On the other hand, coarse-grained chunks can lead to a situation where some worker role instances are busy while others sit idle. Additionally, what if a worker role instance fails just as it is nearing the completion of a computation? (Since the chunk size is large, you could potentially lose a large body of work.)

There are no right or wrong answers to the above questions. You simply need to experiment with various combinations to find the one that works best for you.
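As a starting point for experimenting with the queue-size question, one simple heuristic is to size the pool so that the current backlog drains within a target time. The function below is a back-of-the-envelope sketch, not an Azure autoscale rule. The name `desired_instances`, its parameters, and the default limits of 1 and 20 instances are all assumptions you would replace with your own measurements and budget.

```python
import math


def desired_instances(queue_length, tasks_per_instance_per_minute,
                      target_drain_minutes, min_instances=1, max_instances=20):
    """Estimate how many worker instances are needed to drain the current
    backlog within target_drain_minutes, clamped to the allowed range."""
    if tasks_per_instance_per_minute <= 0 or target_drain_minutes <= 0:
        raise ValueError("throughput and drain time must be positive")
    capacity_per_instance = tasks_per_instance_per_minute * target_drain_minutes
    needed = math.ceil(queue_length / capacity_per_instance)
    return max(min_instances, min(max_instances, needed))
```

With 600 queued tasks, a measured throughput of 5 tasks per instance per minute, and a 10-minute target, this suggests 12 instances. The throughput figure is the part worth measuring carefully, since it depends on both the chunk size and the VM size discussed above.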

Summary

Azure Worker Roles offer a powerful way to develop compute-intensive applications. You can think of them as managed VMs or a managed execution environment that can be used to offload tasks such as video processing and simulation. You don’t have to worry about the OS, patches, or fault-tolerant setup, and worker roles are SLA-backed. You can also scale them dynamically based on load, either by moving to a larger VM or by provisioning multiple instances. The best approach for interacting with worker roles is through an asynchronous queue-based mechanism. Such a setup allows you to decouple worker roles from the incoming flow of requests.

Update: Just as this blog post is being published, Microsoft has announced the Windows Azure WebJobs SDK, which brings background processing to Windows Azure Web Sites. This announcement offers yet another option for hosting long-running jobs. Furthermore, given that WebJobs are based on a high-density PaaS service (Azure Web Sites), as opposed to a worker role (which is essentially an isolated VM), they may prove to be a more cost-effective way of hosting long-running jobs.