First Things First…What’s a Data Lake?

If you’re not already familiar with the term, a “data lake” is generally defined as an expansive collection of data that’s held in its original format until needed. Data lakes are repositories of raw data, collected over time, and intended to grow continually. Any data that’s potentially useful for analysis is collected from both inside and outside your organization, and is usually collected as soon as it’s generated. This helps ensure that the data is available and ready for transformation and analysis when needed. Data lakes are central repositories of data that can answer business questions…including questions you haven’t thought of yet.
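One way to picture "held in its original format until needed" is a landing step that appends each record, exactly as received, to a file for the day it was generated. The sketch below illustrates this in plain Python against the local file system rather than any Azure SDK. The `land_raw` helper and the folder layout are hypothetical. The layout was chosen to match the `/raw/userlog/user-actions-YYYY-MM-DD.csv` files read by the U-SQL sample later in this post.

```python
import tempfile
from datetime import datetime
from pathlib import Path


def land_raw(root: Path, folder: str, prefix: str, raw_line: str, event_time: datetime) -> Path:
    """Append one raw record, unchanged apart from its line ending, to its day's file.

    Hypothetical layout: <root>/raw/<folder>/<prefix>-YYYY-MM-DD.csv
    No parsing or validation happens here; structure is applied later, on read.
    """
    path = root / "raw" / folder / f"{prefix}-{event_time:%Y-%m-%d}.csv"
    path.parent.mkdir(parents=True, exist_ok=True)
    with path.open("a", encoding="utf-8") as f:
        # Normalize only the trailing newline so records stay one per line.
        f.write(raw_line.rstrip("\n") + "\n")
    return path


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        p = land_raw(Path(tmp), "userlog", "user-actions",
                     "alice,login,2018-07-15T08:00:00", datetime(2018, 7, 15, 8, 0))
        print(p.relative_to(tmp).as_posix())  # raw/userlog/user-actions-2018-07-15.csv
```

Keeping the landing step this simple is deliberate: nothing about the record's meaning is decided at write time, so later analyses are free to interpret the same raw files differently.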

Now…What is Azure Data Lake?

Azure Data Lake is actually a pair of services: The first is a repository that provides high-performance access to unlimited amounts of data with an optional hierarchical namespace, thus making that data available for analysis. The second is a service that enables batch analysis of that data. Azure Data Lake Storage provides the high performance and unlimited storage infrastructure to support data collection and analysis, while Azure Data Lake Analytics provides an easy-to-use option for an on-demand, job-based, consumption-priced data analysis engine.

Now that we’ve established what Azure Data Lake is, let’s take a closer look at these two services and where they fit into your cloud ecosystem.

Azure Data Lake Storage (ADLS)

Azure Data Lake Storage (ADLS) is an unlimited-scale, HDFS (Hadoop)-based repository with user-based security and a hierarchical data store. Recently, Azure Blob Storage was updated to (among other things) increase its scaling and security capabilities. Although these updates narrowed the gap between ADLS and Blob Storage, the narrowing didn’t last long: the improvements to Blob Storage are now the basis for updates to Azure Data Lake Storage. These updates are currently in preview as ADLS Generation 2 (Gen2) and build directly on the new improvements in Blob Storage.

ADLS Gen2 sits directly on top of Blob Storage, meaning your files are stored in Blob Storage and are simultaneously available through ADLS. This gives you access to all key Blob Storage functionality, including Azure AD-based permissions, encryption at rest, data tiering, and lifecycle policies. You can access data stored in ADLS Gen2 through either the ADLS (HDFS) or the Blob Storage APIs without moving the data.
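Because Gen2 exposes the same stored object through two endpoints, the only difference between them is how you address a file. The sketch below builds both kinds of address for one file. It assumes the public Azure cloud's endpoint suffixes; sovereign clouds use different ones. The helper name is hypothetical, and so are the account name, container (called a "file system" in ADLS Gen2 terminology), and path used in the example.

```python
from __future__ import annotations


def adls_gen2_uris(account: str, container: str, path: str) -> dict[str, str]:
    """Return the addresses of one ADLS Gen2 file; no data is copied or moved.

    Assumes public-cloud endpoint suffixes (dfs/blob.core.windows.net).
    """
    path = path.lstrip("/")
    return {
        # URI scheme used by the Hadoop ABFS driver (TLS variant) in HDFS-compatible engines
        "abfss": f"abfss://{container}@{account}.dfs.core.windows.net/{path}",
        # Data Lake Storage (DFS) REST endpoint
        "dfs": f"https://{account}.dfs.core.windows.net/{container}/{path}",
        # Blob Storage REST endpoint for the very same object
        "blob": f"https://{account}.blob.core.windows.net/{container}/{path}",
    }


if __name__ == "__main__":
    # "mydatalake" and "lake" are hypothetical names.
    for kind, uri in adls_gen2_uris("mydatalake", "lake", "/raw/userlog/user-actions-2018-07-15.csv").items():
        print(f"{kind:6} {uri}")
```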

Gen2’s key additions on top of Blob Storage’s capabilities are a Hadoop-compatible file system (HDFS), a hierarchical namespace (folders/metadata), and high-performance access to the large volumes of data required for data analytics. With these updates, ADLS remains the best storage interface in Azure for services running large-volume analytic workloads. ADLS makes this performance available to any service that can consume HDFS, including ADLA, Databricks, HDInsight, and more.
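To see why a hierarchical namespace matters, compare two toy models. These are illustrations only, not how Azure stores data internally. In a flat object store, "folders" are just name prefixes, so a folder-level operation such as a rename has to rewrite every object under the prefix. With real directories, the same rename is a single metadata update. Microsoft documents directory rename and delete as atomic metadata operations when the hierarchical namespace is enabled. Both class names are hypothetical.

```python
from __future__ import annotations


class FlatNamespace:
    """Toy flat store: 'folders' exist only as '/'-separated name prefixes."""

    def __init__(self) -> None:
        self.objects: dict[str, bytes] = {}

    def put(self, name: str, data: bytes) -> None:
        self.objects[name] = data

    def rename_folder(self, old: str, new: str) -> int:
        """Rewrite every object under the old prefix; return how many were touched."""
        prefix = old.rstrip("/") + "/"
        moved = [name for name in self.objects if name.startswith(prefix)]
        for name in moved:
            self.objects[new.rstrip("/") + "/" + name[len(prefix):]] = self.objects.pop(name)
        return len(moved)


class HierarchicalNamespace:
    """Toy directory tree: folders are real nodes in nested dicts."""

    def __init__(self) -> None:
        self.root: dict = {}

    def _node(self, parts: list[str]) -> dict:
        # Walk (and create if needed) the directory nodes along a path.
        node = self.root
        for part in parts:
            node = node.setdefault(part, {})
        return node

    def put(self, name: str, data: bytes) -> None:
        *dirs, leaf = name.strip("/").split("/")
        self._node(dirs)[leaf] = data

    def rename_folder(self, old: str, new: str) -> int:
        """Re-link one directory node under its new name; always touches 1 entry."""
        *old_parents, old_leaf = old.strip("/").split("/")
        *new_parents, new_leaf = new.strip("/").split("/")
        src, dst = self._node(old_parents), self._node(new_parents)
        dst[new_leaf] = src.pop(old_leaf)
        return 1
```

In the flat model the cost grows with the number of files under the folder. In the tree it stays constant, which matters once a "folder" holds millions of files.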

Azure Data Lake Analytics (ADLA)

Azure Data Lake Analytics (ADLA) is an on-demand analytics job service. ADLA runs analytics jobs at any scale as a Software as a Service (SaaS) offering, eliminating up-front investment in infrastructure or configuration. Analysis is performed using U-SQL, a language that combines the set-based syntax of SQL with the power of C#. With no up-front investment and a language that a .NET developer can easily work with, ADLA makes it simple to start analyzing the terabytes or petabytes of data resting in your data lake.

What’s U-SQL?

U-SQL is a new language that combines the set-based syntax and structures of SQL with the capability and extensibility of C#. However, U-SQL is not ANSI SQL, because its intended purpose goes beyond reading data from a traditional RDBMS. It needs additional capabilities to support actions that are routine when analyzing large sets of both unstructured and structured data, but that standard SQL does not cover.

One example is that data in a data lake is often unstructured and likely still in the raw format in which it was received. Working with this data requires defining a structure for it and often introducing some transformations and enrichment. U-SQL provides a schema-on-read capability that gives data structure as it is read and used, rather than applying structure as it is received and stored. This unstructured or semi-structured data can then be combined with data in structured stores such as SQL databases to answer questions about your business.
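Schema-on-read can be illustrated outside U-SQL. The plain-Python sketch below is not how ADLA executes anything. It leaves the CSV text exactly as stored and imposes column names and types only while reading. It also enriches each row with a date parsed from the file name. The schema, the file-name pattern, and `read_with_schema` are hypothetical; they were chosen to mirror the user-action log files used in the sample U-SQL script that follows.

```python
import csv
import io
import re
from datetime import datetime

# Hypothetical schema, applied only when data is read; the stored text is never rewritten.
SCHEMA = [("userName", str), ("action", str), ("date", datetime.fromisoformat)]

# Hypothetical file-name pattern; the captured parts become a "fileDate" column,
# similar in spirit to U-SQL's {fileDate:yyyy}-{fileDate:MM}-{fileDate:dd} file sets.
FILE_PATTERN = re.compile(r"user-actions-(\d{4})-(\d{2})-(\d{2})\.csv$")


def read_with_schema(file_name, raw_text):
    """Yield typed dict rows from raw CSV text, enriched with fileDate from the name."""
    match = FILE_PATTERN.search(file_name)
    if match is None:
        return  # Not part of this file set: contributes no rows.
    file_date = datetime(*(int(part) for part in match.groups()))
    for fields in csv.reader(io.StringIO(raw_text)):
        if len(fields) != len(SCHEMA):
            raise ValueError(f"expected {len(SCHEMA)} columns, got {len(fields)}")
        # Structure and types are decided here, at read time.
        row = {name: convert(value) for (name, convert), value in zip(SCHEMA, fields)}
        row["fileDate"] = file_date
        yield row


if __name__ == "__main__":
    raw = "alice,login,2018-07-15T08:00:00\nbob,logout,2018-07-15T09:30:00\n"
    for row in read_with_schema("/raw/userlog/user-actions-2018-07-15.csv", raw):
        print(row["userName"], row["action"], row["date"], row["fileDate"].date())
```

A different analysis could read the same raw file with a different schema, which is the main appeal of deferring structure until read time.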

We’ll dive deeply into U-SQL in the future. For now, let’s take a quick look at a sample U-SQL script. The script below reads data from a set of CSV log files, counts the number of actions per user, and writes a CSV file with the results. Although this is a simple example, it shows the basic concepts of a U-SQL script. The pattern of read (extract), act (select and transform), and output is repeated again and again in U-SQL scripts, and it maps easily to a typical ETL process.

```
// Read the user actions data; fileDate is a virtual column taken from each file name
@userActionsExtract =
    EXTRACT userName string,
            action string,
            date DateTime,
            fileDate DateTime
    FROM "/raw/userlog/user-actions-{fileDate:yyyy}-{fileDate:MM}-{fileDate:dd}.csv"
    USING Extractors.Csv();

// Count the actions for each user where the date in the file name is in July 2018
@totalUserActionCounts =
    SELECT userName,
           COUNT(action) AS actionCount
    FROM @userActionsExtract
    WHERE fileDate.Year == 2018 AND fileDate.Month == 7
    GROUP BY userName;

// Write the aggregations to an output file
OUTPUT @totalUserActionCounts
TO "/processed/userlog/user-action-counts-2018-07.csv"
USING Outputters.Csv();
```

Related Services

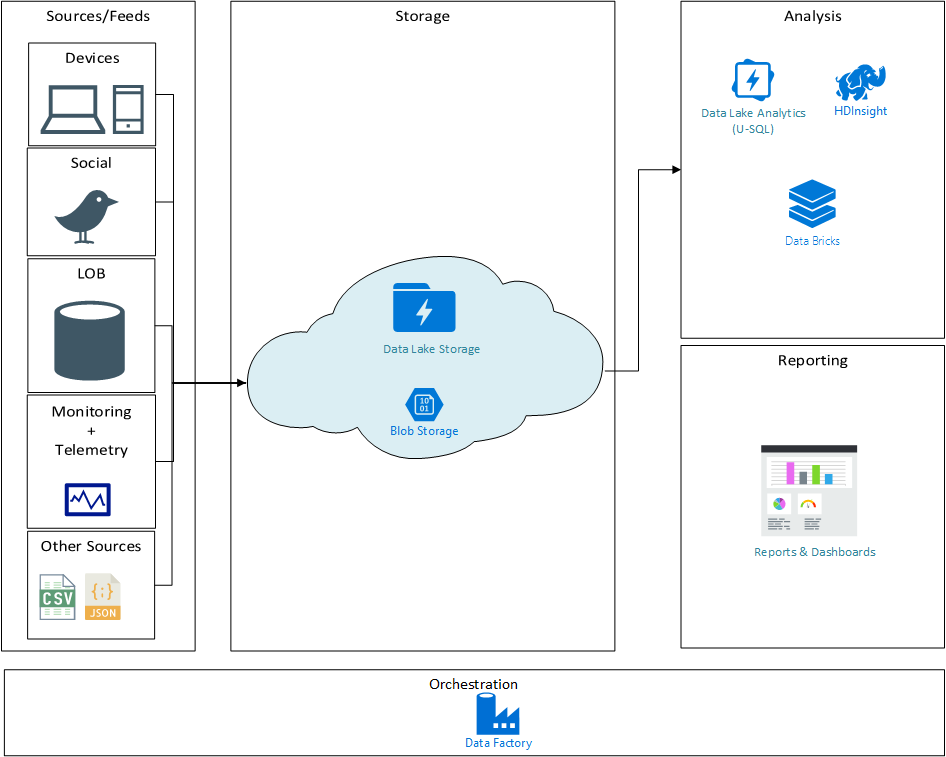

The Azure Data Lake services are only part of a solution for large-scale data collection, storage, and analysis. Here are three questions you might ask about solving these problems. Answers to these questions offer a starting point for further exploration into your data analytics pipelines.

How do you get data into the data lake?

In Azure, the most prominent tool for moving data is Azure Data Factory (ADF). ADF is designed to move large volumes of data from one location to another, making it a key component in collecting data into your data lake. V2 of Azure Data Factory was recently released; it provides an improved UI, trigger-based execution, and Git integration for building data movement pipelines. Another key addition is support for SSIS package execution, so you can reuse existing investments in data movement and transformation.

How do you perform batch analysis of data in the data lake?

In addition to ADLA, other analysis services are directly applicable to large-scale batch analysis of the unstructured data in your data lake. Two of these services available on Azure are HDInsight and Databricks. Using them may make sense if you are already familiar with them or they are already part of your analytics platform in Azure. Databricks provides an Apache Spark SaaS offering that lets you collaborate and run analytics processes on demand. HDInsight provides a wider range of analytics engines, including HBase, Spark, Hive, and Kafka. However, HDInsight is a PaaS offering and therefore requires more management and setup.

How do you report on data in the data lake?

Azure Data Lake and the related tools mentioned above let you analyze your data, but they are generally not the right source for reports and dashboards. Once you’ve analyzed your data and identified the measures and metrics you want to see in dashboards and reports, you’ll need to do some additional work. Ideally, data for dashboards and reports will be structured and stored in a service designed to be queried regularly and to keep report and dashboard data up to date. The right place for this data is a destination such as Azure SQL Database, Azure SQL Data Warehouse, Cosmos DB, or your existing BI platform. This is another stage where Azure Data Factory is key, because it can orchestrate the process to read data, schedule analysis (if needed), structure the data, and write the results to your reporting data store.
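The final "structure data and write it to a reporting store" step could look roughly like the minimal sketch below. Python's built-in `sqlite3` module stands in for a reporting database such as Azure SQL Database. The table, column names, and `load_report_table` helper are hypothetical, and the orchestration that Azure Data Factory would provide is not modeled. The sketch shows the shape of the result: per-user counts from the data lake land in a typed, keyed table that dashboards can query repeatedly.

```python
from __future__ import annotations

import sqlite3


def load_report_table(conn: sqlite3.Connection, counts: dict[str, int], period: str) -> None:
    """Write per-user action counts into a structured, query-friendly table."""
    conn.execute(
        """CREATE TABLE IF NOT EXISTS user_action_counts (
               period       TEXT    NOT NULL,
               user_name    TEXT    NOT NULL,
               action_count INTEGER NOT NULL,
               PRIMARY KEY (period, user_name))"""
    )
    # INSERT OR REPLACE makes re-running a period's load idempotent instead of duplicating rows.
    conn.executemany(
        "INSERT OR REPLACE INTO user_action_counts VALUES (?, ?, ?)",
        [(period, user, n) for user, n in counts.items()],
    )
    conn.commit()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    # Hypothetical counts, shaped like the U-SQL sample's output for July 2018.
    load_report_table(conn, {"alice": 42, "bob": 7}, "2018-07")
    query = "SELECT user_name, action_count FROM user_action_counts ORDER BY action_count DESC"
    for user, n in conn.execute(query):
        print(user, n)
```

The primary key on `(period, user_name)` is a design choice for this sketch. It lets a scheduled pipeline safely reload a period when the underlying analysis is re-run.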

Contextual Overview Diagram

Hopefully, you’ve found this blog helpful in understanding what Azure Data Lake is, the two services that comprise it, and the related services that can streamline your data transformation. If you’re interested in learning more about these services, or how AIS could help, view our Data Modernization service offering here.

Interested in learning more?

- Check out how AIS has used Azure Data Lake for the U.S. Military

- Azure Data Lake Storage (ADLS)

- Azure Data Lake Storage Generation 2 (ADLS Gen2)

- Azure Data Lake Analytics (ADLA)

- Azure Data Factory (ADF)

- Getting Started With U-SQL