Generative AI proofs of concept are easy; turning them into business-critical products is hard. API Management (APIM) isn’t the only piece of the puzzle, but it plays a pivotal role in safely scaling Gen AI-based applications. Below is a practical look at why API management matters when moving from a “cool demo” to an enterprise-ready Gen AI solution.

1. APIs Are the Lifeline of Gen AI

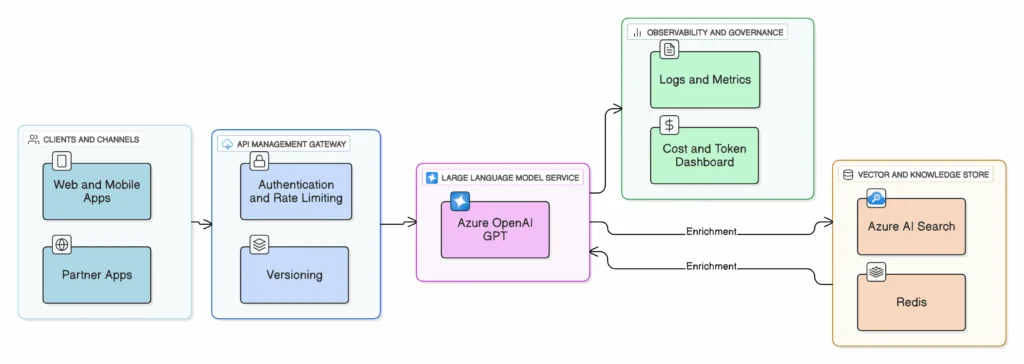

LLM-powered chatbots, document processors, and recommendation engines are often exposed as REST or streaming endpoints. And while performance depends on multiple layers such as model architecture and network design, a well-managed API layer is a practical lever for delivering these systems reliably, securely, and cost-effectively:

- Demand is explosive: 91% of organizations already use or plan to use APIs, and the API‑management market is growing ~17% a year.

- Token budgets are real budgets: Every prompt burns tokens (i.e., money). APIM can enforce token-quota policies that throttle abuse before it shows up on your cloud bill.

- User trust hinges on uptime: Rate limits, circuit breakers, and multi-region gateways shield fragile LLM backends from viral traffic spikes.

A recent AIS project illustrates the point: an agricultural WhatsApp chatbot for a global nonprofit was able to scale from dozens to thousands of users because the LLM sat behind a managed API layer that enforced secure, rate-limited access.
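To make the token-quota idea concrete, here is a minimal sketch of a per-client, sliding-window token budget. It is not APIM's implementation or policy syntax; the `TokenQuota` class, its parameters, and the one-minute window are assumptions chosen for illustration, and a real gateway would express the same rule declaratively in policy.

```python
import time
from collections import defaultdict, deque
from typing import Optional


class TokenQuota:
    """Sliding-window token budget per client (hypothetical helper, not an APIM API)."""

    def __init__(self, tokens_per_minute: int, window_seconds: float = 60.0) -> None:
        self.limit = tokens_per_minute
        self.window = window_seconds
        # client_id -> deque of (timestamp, tokens) entries still inside the window
        self._usage = defaultdict(deque)

    def _used(self, client_id: str, now: float) -> int:
        entries = self._usage[client_id]
        # Drop entries that have aged out of the window.
        while entries and now - entries[0][0] >= self.window:
            entries.popleft()
        return sum(tokens for _, tokens in entries)

    def allow(self, client_id: str, tokens: int, now: Optional[float] = None) -> bool:
        """Record the usage and return True if it fits the remaining budget."""
        now = time.monotonic() if now is None else now
        if self._used(client_id, now) + tokens > self.limit:
            return False  # a gateway would typically answer HTTP 429 here
        self._usage[client_id].append((now, tokens))
        return True
```

Each client is tracked separately, so one noisy caller exhausts only its own budget. Passing `now` explicitly is only there to make the behavior easy to test.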

2. Plan First, Code Second

Gen AI projects often stall when the API strategy is an afterthought. A short planning sprint should cover the essentials:

| Planning Pillar | Why It Matters for Gen AI |
|---|---|
| API Strategy & Governance | Treat each LLM endpoint as a product with an owner, SLA, and KPIs. A governance board prevents “shadow APIs” that bypass oversight. |
| Design & Documentation | OpenAPI contracts, combined with a self-service developer portal, accelerate adoption and reduce integration defects. |
| Security & Compliance | OAuth 2.0, mTLS, PII masking, and audit trails must be built upfront; retrofitting is painful. |
| Scalability Targets | Define acceptable latency, token throughput, and caching rules before traffic arrives. |
| Lifecycle & Versioning | Plan for /v1, /v2, and deprecation windows so that model upgrades don’t break production apps. |

3. Iterate Quickly, Automate the Boring Stuff

Successful Gen AI programs rarely land fully formed. They evolve in measured iterations, each one adding new capabilities while automation handles the repetitive tasks. APIM is the connective tissue that turns these agile practices into sustainable operations:

- Small, high-value releases – APIM’s built-in revisions and versions let you publish or roll back endpoint changes without redeploying the entire stack, so you can ship weekly and react to feedback in hours instead of days.

- Infrastructure-as-Code (IaC) – Terraform and Bicep modules for gateways, products, and policies keep every environment, from dev to prod, identical and traceable, with promotion paths defined in the same repo as your app code.

- Automated policy enforcement – Because APIM policies are files checked into source control, CI/CD pipelines can run linting, security scans, and token-cost checks before a merge reaches production.

- Progressive rollout features – Canary gateways, header-based routing, and percentage splits in APIM let you expose a new model version or rule to 5% of traffic first, observe real-world metrics, then dial up to 100% with a toggle.

Think of APIM automation as the rails that keep every new Gen AI experiment from derailing shared governance, security, and cost controls.
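The percentage-split rollout described above can be sketched in a few lines. This is an illustration of the routing logic only, not how APIM implements it; the function names, the `salt` value, and the `"canary"`/`"stable"` labels are hypothetical. Hashing the client ID keeps each caller on the same side of the split while the percentage is dialed up.

```python
import hashlib


def rollout_bucket(client_id: str, salt: str = "model-v2") -> int:
    """Map a client to a stable bucket in the range 0..99."""
    digest = hashlib.sha256(f"{salt}:{client_id}".encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % 100


def choose_backend(client_id: str, canary_percent: int) -> str:
    """Route to the canary when the client's bucket falls under the percentage."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    return "canary" if rollout_bucket(client_id) < canary_percent else "stable"
```

Because a client's bucket never changes, anyone routed to the canary at 5% stays on it at 25% and 100%, which keeps the metrics you observe comparable between stages.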

4. Handle Spikes Before They Handle You

LLM workloads are notoriously bursty; a single viral link can generate 10 times the normal traffic in just minutes. A well-configured APIM layer cushions those shocks with several built-in safety nets:

- Horizontal scale‑out: add gateway units or regions on demand.

- Rate‑limit + token‑limit: cap requests and tokens per minute per client to prevent a single script from melting your capacity.

- Semantic cache (APIM-routed): APIM policies can direct repeat or near-duplicate prompts to a Redis-backed embeddings cache, short-circuiting duplicate queries and saving both latency and tokens.

- Circuit-breaker routing: if the primary LLM is saturated, fail over to a standby model or a cached summary.

These patterns kept a utility-sector customer stable when outage-reporting traffic quadrupled during a storm; APIM absorbed the spike, while the LLM instances caught up.
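The circuit-breaker routing in the list above can be illustrated with a small sketch. It assumes a simple consecutive-failure threshold and a fixed cool-down; the `CircuitBreaker` class and its parameters are hypothetical, and the `primary` and `fallback` callables stand in for whatever clients call the main and standby models.

```python
import time
from typing import Callable, Optional


class CircuitBreaker:
    """Fails over to a fallback after repeated primary failures (illustrative)."""

    def __init__(
        self,
        failure_threshold: int = 3,
        reset_after: float = 30.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self.failure_threshold = failure_threshold
        self.reset_after = reset_after
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    def _is_open(self) -> bool:
        if self._opened_at is None:
            return False
        if self._clock() - self._opened_at >= self.reset_after:
            # Cool-down elapsed: let one trial call through (half-open state).
            self._opened_at = None
            self._failures = self.failure_threshold - 1
            return False
        return True

    def call(
        self,
        primary: Callable[[str], str],
        fallback: Callable[[str], str],
        prompt: str,
    ) -> str:
        if self._is_open():
            return fallback(prompt)  # skip the saturated primary entirely
        try:
            result = primary(prompt)
        except Exception:
            self._failures += 1
            if self._failures >= self.failure_threshold:
                self._opened_at = self._clock()
            return fallback(prompt)
        self._failures = 0
        return result
```

While the breaker is open, the primary model receives no traffic at all, which is what gives a saturated backend room to recover. After the cool-down, a single failed trial call reopens the breaker immediately.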

5. Security Is a Feature, Not a Checkbox

Without hard controls, a generative model is vulnerable. APIM centralizes those controls and stops abuse before it starts:

- Zero‑trust entry – Entra ID or Okta OAuth tokens gate every call; no token, no access.

- Data‑loss prevention – Policies can strip PII from prompts before they ever reach the model.

- Threat protection – Pair APIM with Azure WAF and Defender for APIs to block OWASP API Security Top 10 exploits in real time.

- Auditable logs – Immutable, centralized logs support NERC CIP and GDPR audit requirements as well as internal forensics.

A bank using self-hosted APIM gateways on-prem even routed prompts through an Azure Function that redacted account numbers, meeting stringent data‑residency rules without slowing development.
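A redaction step like the one in that example might look roughly like the sketch below. The regular expressions are deliberately simple assumptions (any 8 to 17 digit run is treated as an account number), not the bank's actual rules; production redaction needs locale-specific patterns and review.

```python
import re

# Illustrative patterns only; labels and regexes are assumptions for this sketch.
PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "ACCOUNT": re.compile(r"\b\d{8,17}\b"),
}


def redact(prompt: str) -> str:
    """Replace each matched value with a bracketed label before the prompt leaves the gateway."""
    for label, pattern in PATTERNS.items():
        prompt = pattern.sub(f"[{label}]", prompt)
    return prompt
```

For instance, `redact("Transfer from 123456789012 to jane.doe@example.com")` returns `"Transfer from [ACCOUNT] to [EMAIL]"`, while short numbers such as order quantities pass through unchanged.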

6. Watch the Dollars (and Tokens)

Yes, APIM adds a line to your Azure bill, but it also:

- Prevents runaway spending by enforcing quotas and token budgets.

- Saves dev time – one gateway means no more bespoke auth, logging, or throttling code in every micro‑service.

- Shrinks risk costs – downtime or data‑leak fines dwarf the price of a managed gateway.

For one insurance client, the Premium tier paid for itself within a year by cutting custom security engineering and accelerating time‑to‑market for five new AI-driven APIs.

7. Measure Everything, Improve Continuously

With APIM analytics, you can answer, in seconds:

- Which API versions are most popular?

- Where are 5xx spikes coming from?

- Which partner key is burning the most tokens?

Tie usage dashboards to OKRs, run monthly “API business reviews,” and treat data as your compass for the next feature sprint.
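As a rough illustration of what sits behind questions like these, the sketch below aggregates exported gateway log records. The record fields (`subscription_key`, `api_version`, `status`, `prompt_tokens`, `completion_tokens`) are hypothetical names, not an APIM log schema; adapt them to whatever your analytics export actually contains.

```python
from collections import Counter
from typing import Iterable, Mapping


def tokens_by_key(records: Iterable[Mapping]) -> Counter:
    """Total prompt plus completion tokens per subscription key."""
    totals = Counter()
    for record in records:
        totals[record["subscription_key"]] += (
            record["prompt_tokens"] + record["completion_tokens"]
        )
    return totals


def server_errors_by_version(records: Iterable[Mapping]) -> Counter:
    """Count 5xx responses per API version."""
    errors = Counter()
    for record in records:
        if 500 <= record["status"] <= 599:
            errors[record["api_version"]] += 1
    return errors
```

Calling `tokens_by_key(records).most_common(1)` then answers the "which partner key is burning the most tokens" question directly.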

Key Takeaways

- Gen AI without API governance is a liability.

- Plan, automate, and iterate to avoid costly re‑work.

- Scale and secure at the gateway, not the model: APIM is your shock absorber.

- Use metrics as fuel for continuous improvement and ROI storytelling.

Bottom line: Robust API management is a critical enabler, alongside data quality, MLOps, and responsible AI practices, for turning Gen AI proofs of concept into a sustained competitive advantage. Neglect it, and even the best models can end up underutilized while teams scramble to contain risks.