In this blog post, I would like to introduce and explain Azure’s new service offering, the Application Gateway Ingress Controller (AGIC) for Azure Kubernetes Service (AKS). AKS is gaining momentum within the enterprise, and Microsoft has been refining the offering to drive adoption. This feature piques my interest because it can reduce latency and improve application performance within AKS clusters. I will first discuss the basics of the Application Gateway Ingress Controller. Then, I will dive into the underlying architecture and network components that provide the performance benefits. I will also explain the improvements and differences it offers over the existing in-cluster ingress controller for AKS. Finally, I will discuss the new Application Gateway features that Microsoft is developing to refine the service even further.

By definition, the AGIC is a Kubernetes application that lets AKS workloads use Azure’s L7 Application Gateway load balancer, leveraging features such as:

- URL routing

- Cookie-based affinity

- SSL termination or end-to-end SSL

- Support for public, private, hybrid web sites

- Integrated Web Application Firewall (WAF)

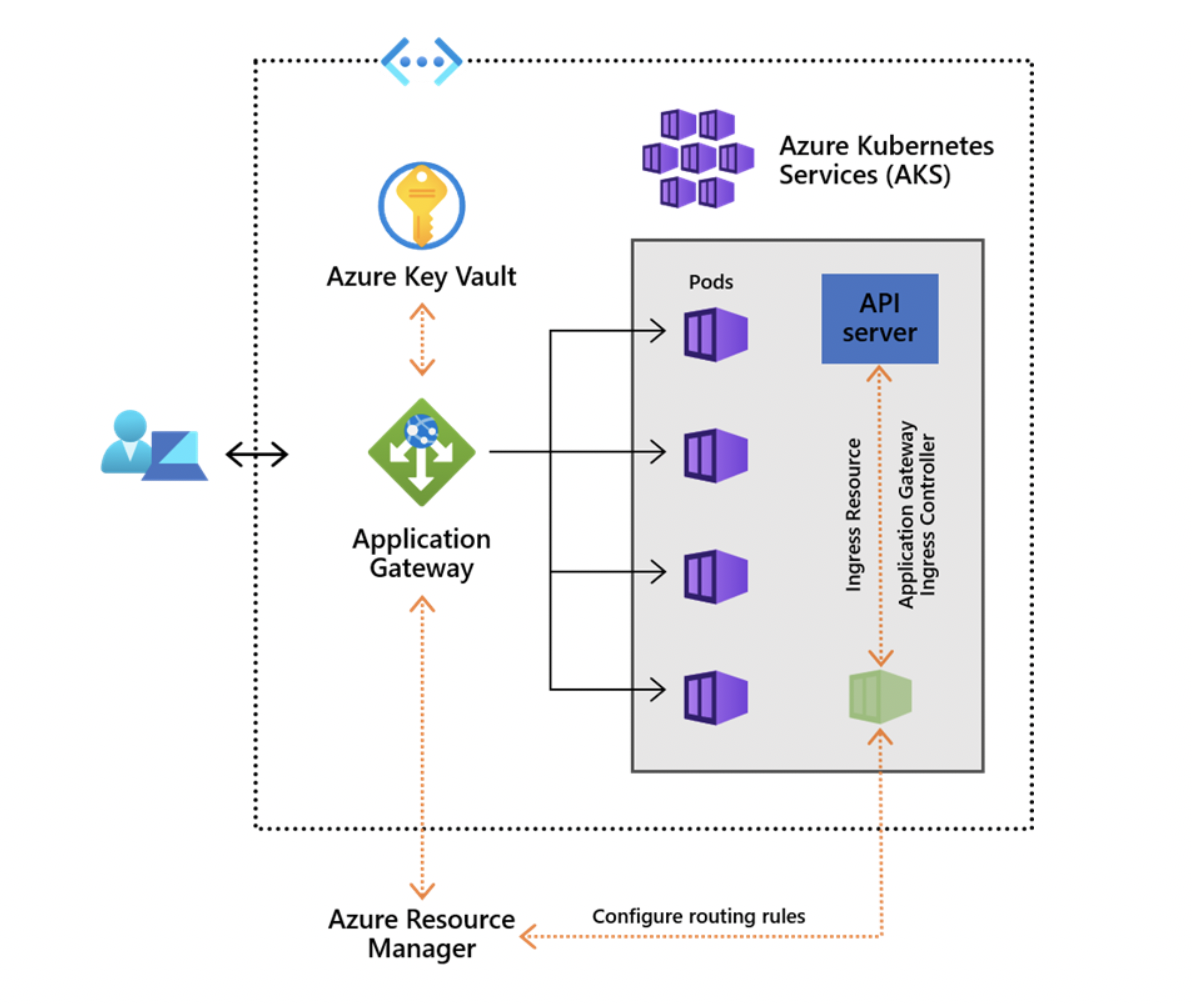

The controller runs in its own pod on your AKS cluster. It monitors the cluster for routing changes and communicates them through Azure Resource Manager to the Application Gateway, which allows uninterrupted service to the applications on AKS no matter what changes are made. Below is a high-level design of how the AGIC is deployed along with the Application Gateway and AKS, including the Kubernetes API server.

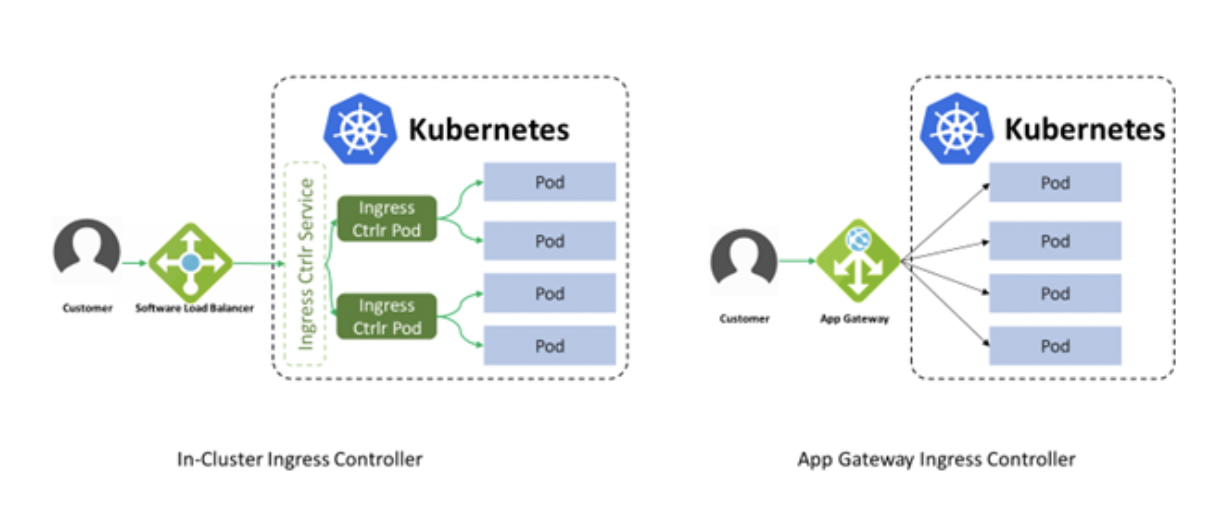
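To make the flow above more concrete, here is a minimal sketch of the kind of Ingress resource the controller would pick up and translate into Application Gateway configuration. I build the manifest as a Python dictionary and print it as JSON, which `kubectl apply -f` accepts. The names `demo-ingress`, `demo.example.com`, and `demo-service` are hypothetical placeholders, and the helper function is my own illustration rather than anything shipped with AGIC.

```python
import json


def build_agic_ingress(name, host, service_name, service_port, cookie_affinity=False):
    """Return a Kubernetes Ingress manifest (as a dict) intended for AGIC."""
    annotations = {
        # Marks this Ingress as handled by the Application Gateway Ingress Controller
        # rather than an in-cluster ingress controller.
        "kubernetes.io/ingress.class": "azure/application-gateway",
    }
    if cookie_affinity:
        # Opt in to the cookie-based affinity feature listed earlier.
        annotations["appgw.ingress.kubernetes.io/cookie-based-affinity"] = "true"

    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name, "annotations": annotations},
        "spec": {
            "rules": [
                {
                    "host": host,
                    "http": {
                        "paths": [
                            {
                                "path": "/",
                                "pathType": "Prefix",
                                "backend": {
                                    "service": {
                                        "name": service_name,
                                        "port": {"number": service_port},
                                    }
                                },
                            }
                        ]
                    },
                }
            ]
        },
    }


# Hypothetical example values; replace with your own service details.
manifest = build_agic_ingress(
    "demo-ingress", "demo.example.com", "demo-service", 80, cookie_affinity=True
)
print(json.dumps(manifest, indent=2))
```

Saving the printed JSON to a file and applying it to a cluster with AGIC installed should result in a matching listener and routing rule on the Application Gateway, although the exact behavior depends on the AGIC version and how it was deployed.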

Next, let’s take a closer look at the AGIC and break down how it differs from the existing in-cluster ingress controller. The image below shows some distinct differences between the two. The in-cluster load balancer performs all the data path operations using the AKS cluster’s compute resources. Because of this, it competes for the same resources as the applications in the cluster.

In terms of networking, there are two different models you can leverage when configuring your AKS cluster: Kubenet and Azure CNI.

Kubenet

This is the default configuration for AKS cluster creation. Each node receives an IP address from the Azure virtual network subnet. Pods then get an IP address from a logically different address space than the Azure virtual network subnet. Network Address Translation (NAT) is then configured so that the pods can communicate with other resources in the virtual network. If you want to dive deeper into Kubenet, this document is very helpful. Overall, these are the high-level features of Kubenet:

- Conserves IP address space.

- Uses Kubernetes internal or external load balancer to reach pods from outside of the cluster.

- You must manually manage and maintain user-defined routes (UDRs).

- Maximum of 400 nodes per cluster.

Azure Container Networking Interface (ACNI)

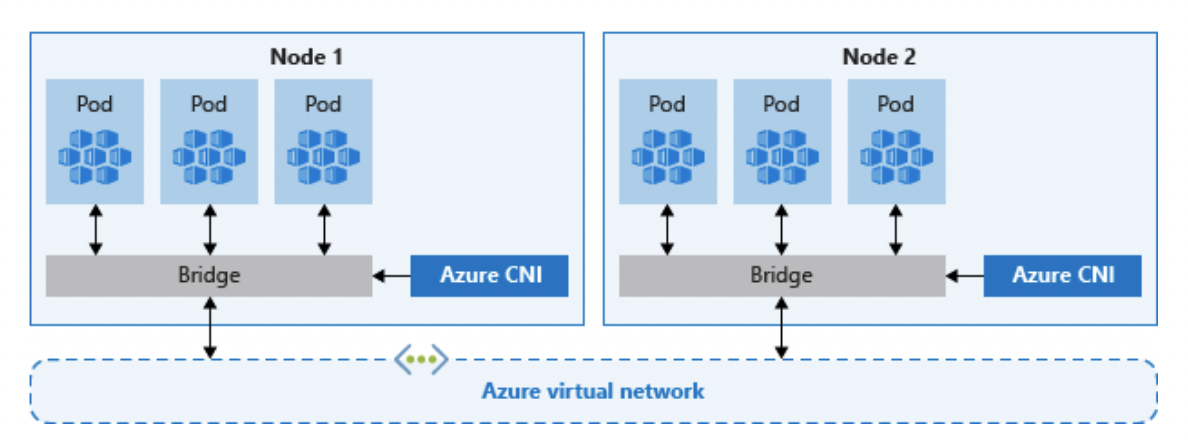

The other networking model uses the Azure Container Networking Interface (ACNI). This is the model the AGIC relies on. Every pod gets an IP address from the virtual network subnet and can be accessed directly. Because of this, these IP addresses need to be unique across your network space and should be planned in advance. Each node has a configuration parameter for the maximum number of pods that it supports, and the equivalent number of IP addresses is reserved upfront for that node. This approach requires more planning, as it can otherwise lead to IP address exhaustion or the need to rebuild clusters in a larger subnet as your application demands grow.
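Since the IP planning is the part most likely to cause trouble later, a rough sizing calculation can help. The sketch below assumes the sizing rule I recall from the Azure CNI guidance: one IP per node plus one IP per potential pod, with one extra node's worth held back for upgrades. It also assumes Azure reserves 5 addresses in every subnet. The function names and the 50-node, 30-pod example are my own, so treat the output as an estimate to check against the current Azure documentation.

```python
AZURE_RESERVED_IPS = 5  # assumption: addresses Azure reserves in each subnet


def required_ips(nodes, max_pods_per_node, surge_nodes=1):
    """Estimate the IPs an Azure CNI cluster needs in its subnet.

    Each node takes one IP and reserves one IP per potential pod.
    surge_nodes is the extra node capacity assumed for upgrades.
    """
    total_nodes = nodes + surge_nodes
    return total_nodes + total_nodes * max_pods_per_node


def smallest_prefix(ip_count):
    """Return the longest IPv4 prefix length whose subnet fits ip_count usable IPs."""
    needed = ip_count + AZURE_RESERVED_IPS
    host_bits = (needed - 1).bit_length()  # smallest n with 2**n >= needed
    return 32 - host_bits


# Hypothetical cluster: 50 nodes, 30 pods per node.
ips = required_ips(50, 30)
print(ips)                   # 1581
print(smallest_prefix(ips))  # 21
```

For this hypothetical cluster, a /21 subnet (2,048 addresses) would be the smallest that fits, which shows how quickly Azure CNI consumes address space compared with Kubenet.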

The Azure documentation also outlines the characteristics of using ACNI.

Azure CNI

- Pods get full virtual network connectivity and can be directly reached via their private IP address from connected networks.

- Requires more IP address space.

Performance

Because the ACNI network model gives pods routable IP addresses, the Application Gateway can have direct access to the AKS pods. According to the Microsoft documentation, this lets the AGIC achieve up to 50 percent lower network latency than in-cluster ingress controllers. Application Gateway is also a managed service, backed by Azure virtual machine scale sets. Instead of using the AKS compute resources for data path processing, as the in-cluster ingress controller does, the Application Gateway can rely on Azure for things like autoscaling at peak times. It therefore does not add to the compute load of your AKS cluster, which could otherwise affect the performance of your application. Microsoft also compared the performance of the two services. They set up a web app running on 3 nodes with 22 pods per node for each service and generated traffic against the web apps. In their findings, under heavy load, the in-cluster ingress controller had approximately 48 percent higher network latency per request compared to the AGIC.
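One thing worth noting is that "48 percent higher" and "up to 50 percent lower" use different baselines, so the two numbers are not interchangeable. The small calculation below is plain arithmetic, not a Microsoft figure. It converts the "higher" figure into the equivalent "lower" figure, assuming both describe the same per-request latency measurement.

```python
def percent_lower(percent_higher):
    """Convert 'B is X% higher than A' into 'A is Y% lower than B'."""
    ratio = 1 + percent_higher / 100  # B / A
    return (1 - 1 / ratio) * 100      # how far A sits below B, in percent


# In-cluster latency was reported as about 48% higher than AGIC latency.
agic_reduction = percent_lower(48)
print(f"{agic_reduction:.1f}")  # 32.4
```

In other words, the measured result corresponds to AGIC latency being roughly 32 percent lower than the in-cluster controller in that particular test, which fits under the "up to 50 percent" ceiling quoted above.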

Conclusion

Overall, the AGIC offers a lot of benefits for businesses and organizations leveraging AKS, from improving network performance to handling on-the-go changes to your cluster configuration as they are made. Microsoft is also building on this offering by adding more features to the service, such as using certificates stored on Application Gateway, mutual TLS authentication, gRPC, and HTTP/2. I hope you found this brief post on the Application Gateway Ingress Controller helpful and useful for future clients. For more detail, see Microsoft’s full article on AGIC and the Microsoft Ignite video explaining more about the advancements of Application Gateway.