Cosmos DB is a cloud-native, geo-distributed, highly scalable, schema-less database provided by Microsoft Azure. It achieves cloud-scale horizontal scalability through partitioning. Choosing how to partition the data can be a tough decision, and it directly affects how well Cosmos DB works as a solution. To make that choice easier to understand, I’d like to discuss two usage patterns that suit Cosmos DB well: the first is storing user information, and the second is storing device telemetry in an internet-of-things scenario.

User Information Use Case

How to store user information in Cosmos DB

Cosmos DB is an excellent solution for storing user information. A few examples would be saving a user’s shopping cart or storing a user’s wishlist. For either of these scenarios, we can create a single schema-less document in Cosmos DB. That single document would contain the contents of their shopping cart or all the items on their wishlist. When determining the partition key for the Cosmos DB collection, we would choose the identity of the user.
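As a minimal sketch of the document shape described above, the Python snippet below builds a shopping-cart document keyed by the user's identity. The field names (`userId`, `type`, `items`, `sku`, `quantity`) and the `/userId` partition key path are illustrative assumptions, not something Cosmos DB prescribes; only `id` is a property that Cosmos DB itself expects on every document.

```python
import json

# Assumed partition key path for the collection; chosen for illustration.
PARTITION_KEY_PATH = "/userId"


def build_cart_document(user_id, items):
    """Return one document holding a user's entire shopping cart."""
    return {
        "id": "cart-" + user_id,  # unique within the user's logical partition
        "userId": user_id,        # the value found at PARTITION_KEY_PATH
        "type": "shoppingCart",
        "items": list(items),
    }


cart = build_cart_document(
    "user-42",
    [{"sku": "book-123", "quantity": 1}, {"sku": "lamp-9", "quantity": 2}],
)
print(json.dumps(cart, indent=2))
```

A wishlist could follow the same pattern with a different `id` and `type`, so both documents live in the same logical partition for that user.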

When integrating Cosmos DB with the website, the user will first have logged in, so we have the user identity. Anytime we need to display the shopping cart or wishlist, it is a single document read. That read goes to a well-known partition, which makes it the fastest and cheapest operation in Cosmos DB. Similarly, updates are straightforward: a single document write to a well-known partition. If users wish to share their wishlist, they would typically do that with a link, which could embed the user identity.
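The read and write path could be sketched roughly as follows. `InMemoryContainer` is a hypothetical stand-in so the example runs without an Azure account; its `read_item(item, partition_key)` and `upsert_item(body)` methods are modeled on the methods of the same names on the container client in the `azure-cosmos` Python SDK, but the class, the helper functions, and the document fields are assumptions made for illustration.

```python
class InMemoryContainer:
    """Hypothetical in-memory stand-in for a Cosmos DB collection."""

    def __init__(self):
        # Documents are grouped by partition key value, then by id.
        self._partitions = {}

    def upsert_item(self, body):
        partition = self._partitions.setdefault(body["userId"], {})
        partition[body["id"]] = dict(body)
        return body

    def read_item(self, item, partition_key):
        # A point read needs both the document id and the partition key value.
        return dict(self._partitions[partition_key][item])


def save_wishlist(container, user_id, skus):
    """Write the whole wishlist as one document in the user's partition."""
    doc = {"id": "wishlist", "userId": user_id, "skus": list(skus)}
    return container.upsert_item(doc)


def load_wishlist(container, user_id):
    """Point read: one document from one known partition, no query involved."""
    return container.read_item(item="wishlist", partition_key=user_id)


store = InMemoryContainer()
save_wishlist(store, "user-42", ["book-123", "lamp-9"])
wishlist = load_wishlist(store, "user-42")
print(wishlist["skus"])
```

Because the user identity is known after login, neither helper ever has to search for the document; both go straight to the user's partition.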

Advantages of storing user information in Cosmos DB

Now, let’s discuss some of the advantages of using Cosmos DB for this scenario. In the above scenario, we are always performing either a single document read or a single document write. Both operations are covered by service level agreements (SLAs) provided by the Cosmos DB service: document reads and writes are guaranteed to finish in 10 milliseconds or less at the 99th percentile. So, by using Cosmos DB, we get guaranteed performance.

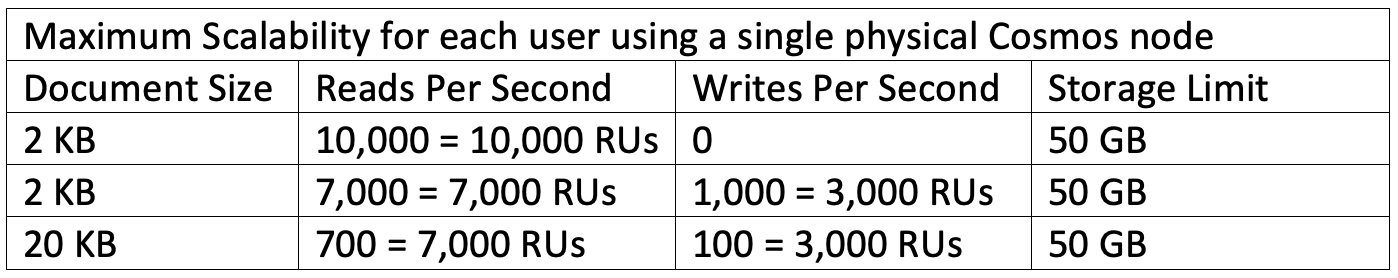

Also, when building a website that stores user information, we need to design for high-load events. Cosmos DB achieves its scalability by creating additional physical nodes (physical partitions) as needed and then mapping logical partitions onto those physical nodes. In our case, a logical partition is simply the identity of a single user. So, at the theoretical limit of scaling out, Cosmos DB would place every individual user of our system on their own physical node. A single RU (request unit) is defined as the cost of a point read of a 1 KB document. Writing a document costs several times the RUs of an equivalent read (the exact ratio depends, among other things, on document size and indexing settings). A single Cosmos DB physical node can support up to 10,000 RUs (request units) per second. Based on these figures, a workload that maxed out scaling so that each user ended up on their own physical node could consume up to 10,000 RUs per second for every user.
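To get a feel for what that per-node ceiling means, here is a back-of-the-envelope calculation in Python. The 10,000 RUs per second figure comes from the text above; the per-operation costs (`READ_COST_RU`, `WRITE_COST_RU`) and the 80/20 read/write mix are assumptions picked for illustration, so substitute the request charges you actually observe for your own documents.

```python
MAX_RU_PER_NODE = 10_000  # per-second ceiling of one physical node (from the text)


def max_ops_per_second(ru_per_operation, nodes=1):
    """Upper bound on operations per second for a given per-operation RU cost."""
    return (MAX_RU_PER_NODE * nodes) / ru_per_operation


# Assumed costs, for illustration only; real charges depend on document
# size, indexing settings, and the operation performed.
READ_COST_RU = 1.0
WRITE_COST_RU = 3.0

reads = max_ops_per_second(READ_COST_RU)
writes = max_ops_per_second(WRITE_COST_RU)
print(f"one node: {reads:,.0f} reads/s or {writes:,.0f} writes/s")

# A mixed workload of 80% reads and 20% writes (also an assumption).
blended_cost = 0.8 * READ_COST_RU + 0.2 * WRITE_COST_RU
mixed = max_ops_per_second(blended_cost)
print(f"one node, 80/20 mix: {mixed:,.0f} operations/s")
```

These are upper bounds on paper; in practice the provisioned RUs per second, not the per-node maximum, will usually be the limiting factor.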

Cosmos DB is highly scalable when you choose the right partition strategy. With Cosmos DB, we also get the ability to easily geo-replicate our entire database. You only need to enable the desired Azure regions, and Cosmos DB will automatically replicate the data and keep it consistent. For our scenario, this provides additional benefits because we can deploy our web service on each continent. Each instance of the web service can then use the closest Cosmos DB region of our database to ensure high performance. So, we achieve high performance and high availability while still managing only a single Cosmos database.

Finally, when provisioning Cosmos DB, you pay for a given number of RUs (request units) per second. The provisioned RUs can be adjusted as needed to either increase scalability or lower cost. So, we can scale Cosmos DB as necessary for our user information scenario to ensure we only pay for the resources that we need at any given time.

Disadvantages of storing user information in Cosmos DB

Cosmos DB provides the ability to query documents using a SQL-like syntax. A query can target either a single partition or all partitions. In general, queries do not come with a response time SLA (service level agreement), and they typically cost many more RUs (request units) than single document reads. The advantage of storing user-level information, especially for a web or e-commerce platform, is that it would not need to perform these types of queries: you are always showing users their own information, looked up by their own user identity. In other words, this use case avoids one of the problem areas of Cosmos DB.
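The difference between the two kinds of query can be illustrated with a toy model. The snippet below is not Cosmos DB's query engine; it is a simplified in-memory sketch, with made-up data and function names, that only counts how many partitions each style of query has to visit.

```python
# Toy model: each logical partition (one per user) holds that user's documents.
partitions = {
    "user-1": [{"userId": "user-1", "sku": "book-123"}],
    "user-2": [{"userId": "user-2", "sku": "lamp-9"}],
    "user-3": [{"userId": "user-3", "sku": "book-123"}],
}


def query(predicate, partition_key=None):
    """Return (matching documents, number of partitions visited)."""
    if partition_key is not None:
        targets = [partition_key]      # scoped to a single partition
    else:
        targets = list(partitions)     # fan out to every partition
    matches = [
        doc
        for key in targets
        for doc in partitions.get(key, [])
        if predicate(doc)
    ]
    return matches, len(targets)


def wants_book(doc):
    return doc["sku"] == "book-123"


# "Is this book on my wishlist?" touches only the caller's partition.
mine, visited_single = query(wants_book, partition_key="user-1")

# "Who has this book on a wishlist?" has to visit every partition.
everyone, visited_all = query(wants_book)

print(visited_single, len(mine))
print(visited_all, len(everyone))
```

The work of the second query grows with the number of partitions, which is the intuition behind its higher RU cost and the lack of a latency guarantee.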

Device Telemetry Use Case

How to store device telemetry in Cosmos DB

Cosmos DB is an excellent solution for storing device telemetry. Consider a manufacturing center with several hardware devices that generate telemetry as they are used. In this case, each piece of generated telemetry could be a separate document in Cosmos DB. Because Cosmos DB is schema-less, each piece of telemetry could have an entirely different schema from any other piece, yet all of them can be stored in the same document collection. For this solution, the ideal partition strategy would be to use the unique identifier of each device. With this storage solution, each device can stream telemetry to Cosmos DB, and a dashboard can then show the telemetry received from each device.
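To illustrate differently shaped telemetry sharing one collection, here is a short Python sketch. The device names, the `kind` field, and the use of `deviceId` as the partition key property are all hypothetical choices made for this example.

```python
import uuid


def telemetry_document(device_id, reading):
    """Wrap one reading as its own document; `reading` may have any shape."""
    doc = {
        "id": str(uuid.uuid4()),  # each piece of telemetry is a separate document
        "deviceId": device_id,    # assumed partition key property
    }
    doc.update(reading)
    return doc


docs = [
    telemetry_document("press-01", {"kind": "temperature", "celsius": 71.5}),
    telemetry_document("press-01", {"kind": "vibration", "axes": {"x": 0.2, "y": 0.1}}),
    telemetry_document("robot-07", {"kind": "heartbeat", "firmware": "2.4.1"}),
]

# Group by the partition key value, as a per-device dashboard might.
by_device = {}
for doc in docs:
    by_device.setdefault(doc["deviceId"], []).append(doc)

for device_id, readings in by_device.items():
    print(device_id, [r["kind"] for r in readings])
```

The three documents have nothing in common beyond `id` and `deviceId`, which is all the partitioning scheme needs.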

Advantages of storing device telemetry in Cosmos DB

The advantages of using Cosmos DB for this scenario are very similar to those for the user information scenario. Specifically, the guaranteed latency SLA (service level agreement) of 10 milliseconds or less is very useful here to ensure that device telemetry is received promptly. The ability to provision the required request units ahead of time and scale them elastically up and down is also very useful. For device telemetry, it is likely that not all telemetry needs to be stored for all time. Cosmos DB nicely supports this by allowing each document to have a time to live (TTL) property. This property indicates how many seconds the document should remain in the system after the last update to the document. This feature works nicely to ensure that the system only retains, say, the last week or the previous 30 days of telemetry. Finally, schema-less storage is incredibly useful for this scenario as well.
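The expiry rule can be sketched as a small Python function. This is a toy model of the behavior rather than anything Cosmos DB exposes: it assumes the per-document `ttl` property (in seconds), the system `_ts` property (last-modified time in epoch seconds), and a collection-level default TTL that must be enabled for per-document values to take effect, with `-1` meaning "never expire". The function name and sample values are made up, and the real service removes expired documents in the background.

```python
SEVEN_DAYS = 7 * 24 * 60 * 60  # retention window in seconds


def is_expired(doc, now_epoch_seconds, container_default_ttl):
    """Toy model: has this document outlived its time to live?"""
    if container_default_ttl is None:   # TTL is switched off for the collection
        return False
    ttl = doc.get("ttl", container_default_ttl)
    if ttl == -1:                       # -1 means the document never expires
        return False
    # The clock starts at the last update, not at creation.
    return now_epoch_seconds >= doc["_ts"] + ttl


reading = {
    "id": "r-1",
    "deviceId": "press-01",
    "_ts": 1_700_000_000,   # last modified (epoch seconds)
    "ttl": SEVEN_DAYS,
}

an_hour_later = reading["_ts"] + 3600
eight_days_later = reading["_ts"] + 8 * 24 * 60 * 60

print(is_expired(reading, an_hour_later, container_default_ttl=-1))
print(is_expired(reading, eight_days_later, container_default_ttl=-1))
```

Note that updating a document resets its `_ts`, so a reading that keeps being modified would keep extending its own lifetime.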

Disadvantages of storing device telemetry in Cosmos DB

Like the user information scenario, the primary disadvantage of Cosmos DB for this solution is querying documents, particularly querying across devices. Instead of querying telemetry directly from Cosmos DB, one could use either the analytical data storage feature of Cosmos DB or the change feed. The analytical data storage feature replicates the data from Cosmos DB into a separate column-based data storage system using Parquet files. Once the data is replicated, it can be connected directly to Azure Synapse and queried using either Apache Spark or a massively parallel SQL engine. Both compute engines are designed for big data querying scenarios and have no effect on the RUs (request units) required to manipulate documents in the Cosmos DB transactional store. I’ll discuss the Cosmos change feed in the next section.

Event sourcing and Cosmos change feed

Cosmos DB provides a built-in change history feature known as the change feed. The Cosmos DB change feed tracks changes to the documents in a collection over time. Any client can query the change feed at any moment and from any given historical point in the collection. Each client can then track their position in the change feed to know if new document changes have arrived in the system.
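The idea of each client tracking its own position can be sketched with a small in-memory model. `ChangeLog` below is a hypothetical stand-in, not the Cosmos DB API: it uses a plain integer offset where the real change feed hands back a continuation token, and it ignores partitioning entirely.

```python
class ChangeLog:
    """Hypothetical in-memory stand-in for a collection's change feed."""

    def __init__(self):
        self._changes = []  # document versions in the order they were written

    def write(self, doc):
        self._changes.append(dict(doc))

    def read_from(self, position):
        """Return (changes at or after `position`, position to resume from)."""
        batch = self._changes[position:]
        return batch, len(self._changes)


feed = ChangeLog()
feed.write({"id": "wishlist", "userId": "user-42", "skus": ["book-123"]})
feed.write({"id": "wishlist", "userId": "user-7", "skus": []})

position = 0  # this client starts from the beginning of the feed
first_batch, position = feed.read_from(position)

feed.write({"id": "wishlist", "userId": "user-42", "skus": ["book-123", "lamp-9"]})

second_batch, position = feed.read_from(position)  # only the new change
empty_batch, position = feed.read_from(position)   # nothing new has arrived

print(len(first_batch), len(second_batch), len(empty_batch))
```

Since the position is held by the client, several independent consumers can read the same feed at their own pace without affecting one another.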

The change feed feature is an incredibly useful feature to replicate data from Cosmos DB into another storage system as needed in near real-time. Specifically, as mentioned, complex querying across documents is not ideal in Cosmos, but with the change feed, you can easily replicate the data to any other third-party data platform.

However, the most compelling use case of the change feed is to pair it with event sourcing. For both of our use cases, instead of storing the current state of the data (e.g., the current contents of the user’s shopping cart or wishlist), save each action that the user performed as a document (e.g., added an item to the wishlist, removed an item from the wishlist). The same would apply to the device telemetry scenario. One could then use the change feed to process the events in near real-time, calculate the desired aggregate (e.g., the user’s current wishlist), and store it in Cosmos DB as an aggregate document or in another data storage system. By storing events and processing them in order using the change feed, you can also enable and trigger other scenarios, such as answering whether an ad campaign resulted in users adding the item to their shopping cart or wishlist, and how quickly that occurred after they interacted with the campaign. Cosmos DB specifically enables this scenario because of its schema-less support: each event can be stored as a document with a different schema. Still, all the events can be stored in a single collection.
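Calculating the aggregate from the events amounts to a fold over the ordered event stream. The Python sketch below shows one way this might look for the wishlist; the event shape (`userId`, `action`, `sku`) and the function names are assumptions for illustration, and in a real system the `events` list would be whatever the change feed delivers.

```python
def apply_event(wishlist, event):
    """Apply one event to a wishlist (a set of SKUs) and return the new state."""
    updated = set(wishlist)
    if event["action"] == "added":
        updated.add(event["sku"])
    elif event["action"] == "removed":
        updated.discard(event["sku"])
    return updated


def current_wishlists(events):
    """Fold an ordered event stream into one wishlist per user."""
    state = {}
    for event in events:
        user = event["userId"]
        state[user] = apply_event(state.get(user, set()), event)
    return state


events = [
    {"userId": "user-42", "action": "added", "sku": "book-123"},
    {"userId": "user-42", "action": "added", "sku": "lamp-9"},
    {"userId": "user-7", "action": "added", "sku": "book-123"},
    {"userId": "user-42", "action": "removed", "sku": "book-123"},
]

aggregates = current_wishlists(events)
for user, skus in sorted(aggregates.items()):
    print(user, sorted(skus))
```

Because the events themselves are kept, the same stream can later be replayed to answer new questions, such as the ad campaign example, without changing how the data is written.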

Summary

To wrap up, here is a summary of the advantages of storing user information and device telemetry in Cosmos DB.

- The partition strategy is very clear (user identity or device ID)

- Only uses document reads and document writes (most efficient operations in Cosmos DB)

- Guaranteed SLAs on latency

- Easy to predict RU (request unit) usage

- Support for complex schema-less documents

- Elastic scalability: the provisioned request units per second can be adjusted as needed, and the partitioning strategy allows scaling out, at the limit, to a single physical node for each user or device

- Ability to scale Cosmos DB down to save costs or up to deal with high load

- Automatic geo-replication and use of the nearest region to reduce round-trip latency

- Ability to auto-trim device telemetry by utilizing the time-to-live (TTL) feature on a document

- Ability to perform event sourcing and near real-time reactions to data changes using the Change Feed feature of Cosmos DB

Hopefully, this article highlighted some of the ideal usage patterns for Cosmos DB and helped you better understand how to utilize this cloud-scale, elastic, geo-replicated schema-less database.