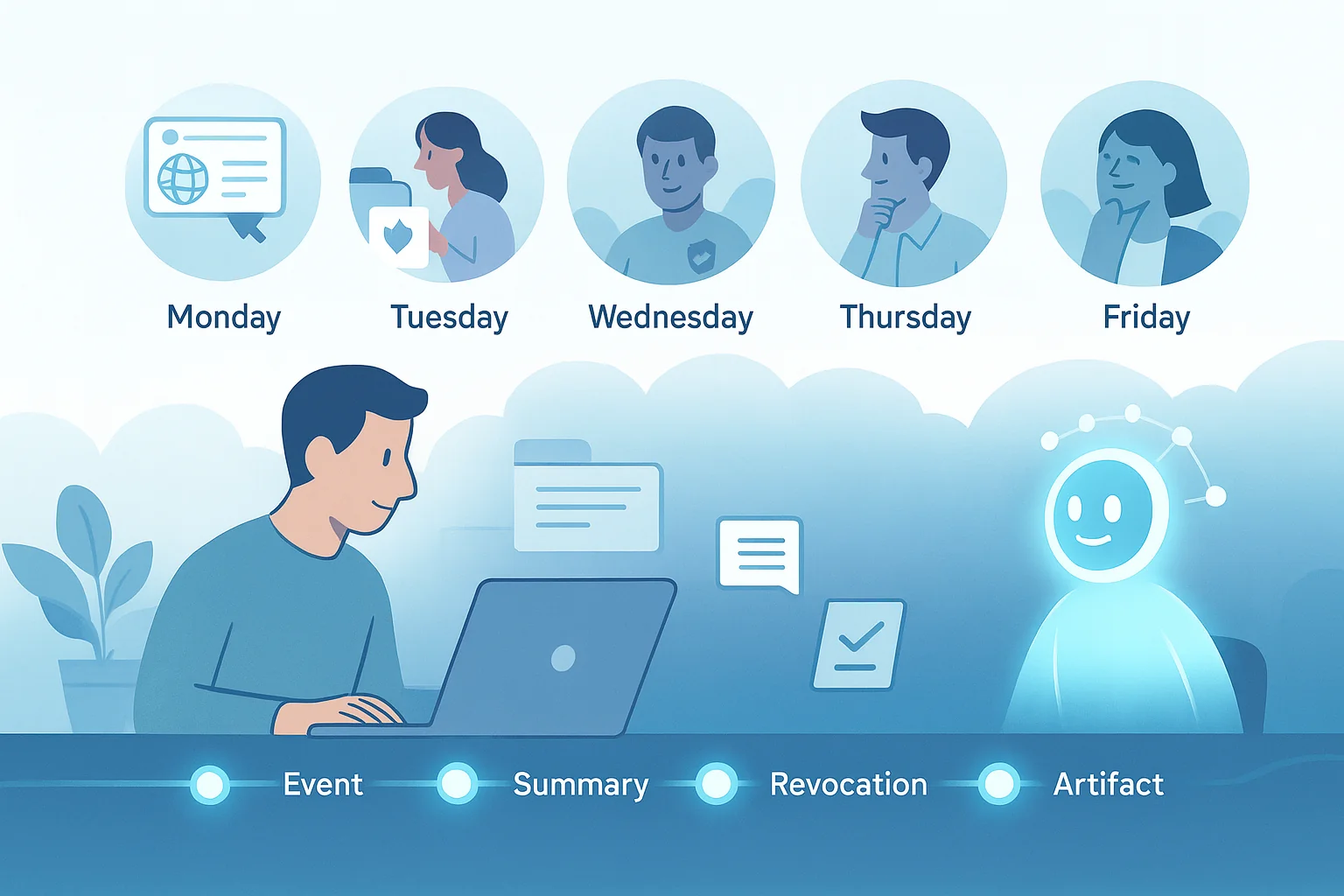

Picture a “research-to-draft” agent asked to produce a policy brief by Friday. On Monday it searches the web and your knowledge base, extracts citations, and drafts an outline. On Tuesday a teammate drops new documents in SharePoint and tweaks the goal; on Wednesday Legal flags a source that must be removed; on Thursday a manager asks, “Why did the agent recommend this?” By Friday, the agent’s context has rolled past key facts, its tools have returned inconsistent results, and no one can see a clean trail of what changed, when, and why. This agent needs a reliable memory!

What’s changed is the unit of work. We’ve shifted from short chat sessions to goal-oriented, multi-step workflows that span hours or days, cross multiple tools and data sets, and can involve multiple human interactions. In that world, memory is part of the agent’s critical product surface, not an afterthought. For an AI agent, memory simply means the organized data it can rely on to meet the demands of this new unit of work.

This post offers a few opinionated thoughts on designing that memory. Don’t treat this guidance as hard rules for every case; treat it as the starting point we’ve come to rely on when building agentic workflows. It should apply whether you are a developer designing and implementing the core components of a custom AI agent or a knowledge worker using one of the many amazing agentic platforms now available. In both cases, understanding the types of data a reliable memory needs gives your agent the best chance of producing valuable results.

Hopefully, you’ll walk away with:

- A taxonomy that describes the kind of memory that’s important to AI agents.

- Design considerations to decide what to keep, how long it should live, and how it should be stored.

- Questions to answer before building anything.

- Reference patterns you can implement.

Memory Taxonomy

Agent memory usually isn’t one thing. It’s a set of purpose-built data sets with different lifecycles, stores, and permissions. Each type does a different job in the workflow: some keep the current step on track, others capture what happened for audit, and others preserve reusable knowledge or enforce procedures and preferences. The taxonomy below is a reference for deciding what to keep, where it lives, how long it lives, and who can touch it.

Working Memory

What it is: The agent’s scratchpad. Plans, partial thoughts, tool arguments, and short-lived summaries that keep the current step coherent.

Lifespan: Minutes to hours.

Type of Store: Fast in-memory cache with encryption at rest.

Good Practices: Set a time to live (TTL) of 30–120 minutes; summarize content with citations; redact secrets before any persistent write; auto-summarize at step boundaries.
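As a rough illustration of those practices, here is a minimal Python sketch of a working-memory scratchpad, assuming a single-process dictionary in place of a real encrypted cache. The class name `WorkingMemory`, the `redact` helper, and its credential regex are all hypothetical, and the "summary" simply joins live entries where a real agent would ask the model to summarize.

```python
import re
import time
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical pattern for inline credentials such as "api_key=..." or "password: ..."
SECRET_PATTERN = re.compile(r"(api[_-]?key|password|token)\s*[:=]\s*\S+", re.IGNORECASE)


def redact(text: str) -> str:
    """Mask values that look like inline credentials."""
    return SECRET_PATTERN.sub(lambda m: m.group(1) + "=[REDACTED]", text)


@dataclass
class WorkingMemory:
    """Short-lived scratchpad for the current step; entries expire after ttl_seconds."""
    ttl_seconds: float = 60 * 60  # 60 minutes, inside the suggested 30-120 minute window
    clock: Callable[[], float] = time.monotonic
    _entries: Dict[str, Tuple[str, float]] = field(default_factory=dict)

    def put(self, key: str, value: str) -> None:
        self._entries[key] = (value, self.clock() + self.ttl_seconds)

    def get(self, key: str) -> Optional[str]:
        item = self._entries.get(key)
        if item is None:
            return None
        value, expires_at = item
        if self.clock() >= expires_at:
            del self._entries[key]  # lazily evict expired entries
            return None
        return value

    def summarize_step(self, step_id: str, citations: List[str]) -> str:
        """At a step boundary, fold live entries into one summary and clear the pad."""
        live = []
        for key in list(self._entries):
            value = self.get(key)
            if value is not None:
                live.append(value)
        self._entries.clear()
        summary = f"[{step_id}] " + " | ".join(live) + f" (sources: {', '.join(citations)})"
        return redact(summary)  # redact before the summary is written anywhere persistent
```

Injecting `clock` keeps expiry testable without waiting; in production the TTL would more likely be enforced by the cache itself.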

Episodic Memory

What it is: An ordered timeline of what happened. Observations, tool calls, decisions, and outcomes. Think of it as the “flight recorder” you use to replay, explain, or undo work later.

Lifespan: Months to years, based on operations and audit or compliance needs.

Type of Store: A write-once (append-only) activity log, with larger files saved in a secure document store.

Good Practices: Record one event per step or tool call; include who did it, when, with which tool or model, and links to sources or files. Create periodic summaries to keep the log compact, and use clear IDs so events tie back to a task.
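A minimal sketch of the episodic log, assuming JSON Lines entries held in a Python list; a production log would live in storage that enforces write-once semantics itself. `Event` and `EpisodicLog` are hypothetical names, and the fields mirror the who/when/which-tool/links guidance above.

```python
import json
import uuid
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import List, Tuple


@dataclass(frozen=True)
class Event:
    task_id: str          # ties the event back to a task
    step: int
    actor: str            # who: a user, the agent, or a service identity
    tool: str             # which tool or model ran
    action: str
    outcome: str
    sources: Tuple[str, ...] = ()  # links to sources or files kept in a document store
    event_id: str = field(default_factory=lambda: uuid.uuid4().hex)
    at: str = field(default_factory=lambda: datetime.now(timezone.utc).isoformat())


class EpisodicLog:
    """In-process stand-in for a write-once log: there is no update or delete method."""

    def __init__(self) -> None:
        self._lines: List[str] = []  # one JSON document per event, JSON Lines style

    def append(self, event: Event) -> str:
        self._lines.append(json.dumps(asdict(event)))
        return event.event_id

    def events(self, task_id: str) -> List[dict]:
        return [e for e in map(json.loads, self._lines) if e["task_id"] == task_id]

    def summarize(self, task_id: str) -> str:
        """Compact view: one line per event, keeping a short ID to trace back to the raw entry."""
        return "\n".join(
            f"step {e['step']} {e['tool']}: {e['outcome']} ({e['event_id'][:8]})"
            for e in self.events(task_id)
        )
```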

Semantic Memory

What it is: Durable knowledge the agent can reuse. Facts, FAQs, profiles, SOPs, and other key knowledge are kept so they’re easy to find and cite.

Lifespan: Months to years, with regular review for freshness.

Type of Store: A searchable knowledge index that typically supports a mix of keyword and “similar meaning” search (for example, a vector store), plus a document library.

Good Practices: Curate sources; split long docs into small, titled chunks with tags; keep author/date/source links; filter or redact sensitive fields before indexing; remove duplicates; prefer recent and authoritative material.
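The chunking and de-duplication steps could look roughly like this. It is a sketch under simple assumptions: chunks are packed by paragraph up to a word limit (a single paragraph longer than the limit stays whole), and de-duplication only catches exact matches after whitespace and case normalization. `Chunk`, `chunk_document`, and `dedupe` are hypothetical helpers, not a library API.

```python
import hashlib
from dataclasses import dataclass
from typing import List


@dataclass
class Chunk:
    title: str
    text: str
    tags: List[str]
    author: str   # provenance travels with every chunk
    date: str
    source: str


def chunk_document(title: str, body: str, *, author: str, date: str, source: str,
                   tags: List[str], max_words: int = 120) -> List[Chunk]:
    """Pack paragraphs into titled chunks of at most max_words words."""
    groups, current = [], []
    for para in [p.strip() for p in body.split("\n\n") if p.strip()]:
        words = para.split()
        if current and len(current) + len(words) > max_words:
            groups.append(current)  # close the chunk before it grows past the limit
            current = []
        current.extend(words)
    if current:
        groups.append(current)
    return [Chunk(f"{title} (part {i + 1})", " ".join(ws), list(tags), author, date, source)
            for i, ws in enumerate(groups)]


def dedupe(chunks: List[Chunk]) -> List[Chunk]:
    """Drop exact duplicates by hashing normalized text (near-duplicates need more work)."""
    seen, unique = set(), []
    for c in chunks:
        key = hashlib.sha256(" ".join(c.text.lower().split()).encode()).hexdigest()
        if key not in seen:
            seen.add(key)
            unique.append(c)
    return unique
```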

Procedural Memory

What it is: How-to knowledge for getting things done. Tool capabilities, input and output formats, step-by-step playbooks, and reusable “skills.”

Lifespan: Versioned and updated as tools change.

Type of Store: A version-controlled repository for skills and schemas (like a code repo), with a small runtime cache so agents can quickly discover what’s available.

Good Practices: Keep procedures small and composable; define clear inputs and outputs; add basic tests; review and approve changes; note which version ran during execution; deprecate old versions with a cut-over date.
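Here is one way to sketch procedural memory in Python, assuming an in-memory registry keyed by name and version; in practice the definitions would live in a version-controlled repo, with something like this registry acting as the runtime cache. `Skill`, `SkillRegistry`, and the `deprecated_after` cut-over field are illustrative assumptions.

```python
from dataclasses import dataclass
from datetime import date
from typing import Callable, Dict, List, Optional, Tuple


@dataclass(frozen=True)
class Skill:
    name: str
    version: str
    inputs: Tuple[str, ...]               # declared input fields
    outputs: Tuple[str, ...]              # declared output fields
    run: Callable[[dict], dict]
    deprecated_after: Optional[date] = None  # cut-over date for retirement


class SkillRegistry:
    def __init__(self) -> None:
        self._skills: Dict[Tuple[str, str], Skill] = {}

    def register(self, skill: Skill) -> None:
        self._skills[(skill.name, skill.version)] = skill

    def resolve(self, name: str, version: str, today: date) -> Skill:
        skill = self._skills[(name, version)]
        if skill.deprecated_after and today > skill.deprecated_after:
            raise LookupError(f"{name}@{version} was retired on {skill.deprecated_after}")
        return skill

    def execute(self, name: str, version: str, args: dict, today: date, trace: List[dict]) -> dict:
        skill = self.resolve(name, version, today)
        missing = set(skill.inputs) - set(args)
        if missing:
            raise ValueError(f"missing inputs: {sorted(missing)}")
        result = skill.run(args)
        trace.append({"skill": name, "version": version})  # note which version ran
        return {k: result[k] for k in skill.outputs}       # only the declared outputs
```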

Preference Memory

What it is: User and organization settings, constraints, and consent. Things like preferred output format, data-sharing rules, redaction policies, and allowable regions.

Lifespan: Varies by purpose; set review and expiry dates where appropriate.

Type of Store: A central settings store (not the semantic knowledge index).

Good Practices: Record why each setting exists and who approved it; apply least-privilege access; make revocations take effect immediately; separate settings by user/team/org; log changes for audit; encrypt sensitive items.
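A minimal sketch of a preference store, assuming a dictionary keyed by scope, owner, and key. The precedence used here (user over team over org) is an assumption; for constraints such as allowable regions, you may want org policy to win instead. `Setting` and `PreferenceStore` are hypothetical names.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Dict, Optional

SCOPES = ("user", "team", "org")  # most specific first


@dataclass
class Setting:
    value: object
    reason: str                       # why the setting exists
    approved_by: str                  # who approved it
    expires: Optional[datetime] = None


class PreferenceStore:
    def __init__(self) -> None:
        self._settings: Dict[tuple, Setting] = {}  # (scope, owner, key) -> Setting
        self.audit: list = []                      # change log for audit

    def set(self, scope: str, owner: str, key: str, setting: Setting, actor: str) -> None:
        self._settings[(scope, owner, key)] = setting
        self.audit.append(("set", scope, owner, key, actor, datetime.now(timezone.utc)))

    def revoke(self, scope: str, owner: str, key: str, actor: str) -> None:
        # Removing the entry means the very next lookup no longer sees it.
        self._settings.pop((scope, owner, key), None)
        self.audit.append(("revoke", scope, owner, key, actor, datetime.now(timezone.utc)))

    def resolve(self, key: str, owners: Dict[str, str], now: datetime):
        """Return the most specific unexpired value; owners maps scope -> id, e.g. {'user': 'ana'}."""
        for scope in SCOPES:
            s = self._settings.get((scope, owners.get(scope), key))
            if s is not None and (s.expires is None or now < s.expires):
                return s.value
        return None
```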

If it affects trust (you may need to replay or explain it later), make it Episodic. If it’s reusable knowledge, keep it in Semantic. If it’s how to act, make it Procedural. If it’s who or what is allowed, keep it in Preference. Everything else stays in Working Memory and expires fast.
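The rule of thumb can be written as a small routing function. The order of the checks, which decides the answer when more than one applies, is our assumption; the flag names and `route` itself are hypothetical.

```python
from enum import Enum


class MemoryType(Enum):
    EPISODIC = "episodic"
    SEMANTIC = "semantic"
    PROCEDURAL = "procedural"
    PREFERENCE = "preference"
    WORKING = "working"


def route(*, affects_trust: bool = False, reusable_knowledge: bool = False,
          how_to_act: bool = False, governs_permission: bool = False) -> MemoryType:
    """Apply the rule of thumb in order; anything unmatched stays in short-lived working memory."""
    if affects_trust:
        return MemoryType.EPISODIC
    if reusable_knowledge:
        return MemoryType.SEMANTIC
    if how_to_act:
        return MemoryType.PROCEDURAL
    if governs_permission:
        return MemoryType.PREFERENCE
    return MemoryType.WORKING
```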

Questions to Answer Before Building Anything

Are your agents solving quick, self-contained problems that finish in minutes, or do they run for hours and pick up again tomorrow?

Before you pick a database or wire up an index, start with the expected task horizon. Short horizons rarely need more than ephemeral working memory plus stateless retrieval from a curated knowledge source. Long-horizon work is different: plans change, tools fail, and people step in. That calls for a small working buffer for the active step and a durable, append-only event log that checkpoints what happened so you can resume, retry, and explain without dragging a massive context window around.

Who needs to read and write each type of memory: just one user, a shared team, or the whole organization?

Treat scope as a first-class design choice. Personal work stays user-scoped and isolated, but the moment collaboration appears, define a shared space with clear promotion rules (what becomes team-visible and who approves it). Enforce permissions before retrieval so sensitive content never leaves the store by mistake. This single decision goes a long way toward preventing leakage and keeping “private vs. shared” behavior predictable.
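To make "enforce permissions before retrieval" concrete, here is a sketch that filters by scope before any matching happens. The three-level scope model and the substring match standing in for real search are simplifying assumptions; `Record`, `can_read`, and `retrieve` are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Set


@dataclass(frozen=True)
class Record:
    text: str
    owner: str
    scope: str = "user"   # "user", "team", or "org"
    team: str = ""


def can_read(rec: Record, user: str, teams: Set[str]) -> bool:
    if rec.scope == "org":
        return True
    if rec.scope == "team":
        return rec.team in teams
    return rec.owner == user  # user scope: only the owner


def retrieve(records: List[Record], query: str, user: str, teams: Set[str]) -> List[Record]:
    # Permission filter runs first, so records the caller cannot read are never scored or returned.
    visible = [r for r in records if can_read(r, user, teams)]
    return [r for r in visible if query.lower() in r.text.lower()]
```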

Will you need to reconstruct decisions later for compliance, forensics, or simply to explain an outcome?

If the answer is yes, plan for an audit trail you can actually use. Capture concise, per-step breadcrumbs: what happened, when, with which tool or model, and links to sources or files. Treat the event log as a product: append by default, summarize on a schedule, and set retention up front so “why did this happen?” is answerable without spelunking through chat transcripts.
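One way to make "why did this happen?" answerable is to let each breadcrumb point at the event that led to it, then walk those links backward. The `caused_by` field, the one-year default retention window, and the helper names are assumptions for illustration, not a prescribed schema.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional, Tuple


@dataclass(frozen=True)
class Breadcrumb:
    event_id: str
    at: datetime
    what: str
    tool: str                         # which tool or model produced this step
    sources: Tuple[str, ...] = ()     # links to sources or files
    caused_by: Optional[str] = None   # hypothetical link to the event that led here


def explain(log: List[Breadcrumb], event_id: str) -> List[str]:
    """Walk caused_by links back from a decision, then return the chain oldest-first."""
    by_id = {e.event_id: e for e in log}
    chain, seen = [], set()
    current = by_id.get(event_id)
    while current is not None and current.event_id not in seen:
        seen.add(current.event_id)  # guard against accidental cycles
        chain.append(f"{current.at:%Y-%m-%d} {current.tool}: {current.what} {list(current.sources)}")
        current = by_id.get(current.caused_by) if current.caused_by else None
    return list(reversed(chain))


def apply_retention(log: List[Breadcrumb], now: datetime,
                    keep_for: timedelta = timedelta(days=365)) -> List[Breadcrumb]:
    """Retention decided up front: keep only breadcrumbs inside the policy window."""
    return [e for e in log if now - e.at <= keep_for]
```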

What budget are you operating within for tokens, storage, and latency, both on average and at peak?

Memory is where costs quietly accumulate: capturing, summarizing, embedding, and retrieving. Put a simple budget on each phase of a task, and prefer summarizing at natural boundaries over dragging giant contexts through a long-running process. Cache repeatable tool calls and monitor index growth so you don’t re-embed the world. Aim for clear unit economics: what does one more memory operation cost, and what value does it unlock?
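A per-phase budget can be as simple as a counter that refuses to overspend. This sketch assumes token counts are already known for each operation; the phase names, limits, and per-1K-token price are placeholders, and `PhaseBudget` and `unit_cost` are hypothetical helpers.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class PhaseBudget:
    """Token budget per task phase; refuses any charge that would exceed the phase limit."""
    limits: Dict[str, int]
    spent: Dict[str, int] = field(default_factory=dict)

    def charge(self, phase: str, tokens: int) -> bool:
        used = self.spent.get(phase, 0) + tokens
        if used > self.limits[phase]:
            return False  # over budget: summarize or stop instead of growing the context
        self.spent[phase] = used
        return True

    def remaining(self, phase: str) -> int:
        return self.limits[phase] - self.spent.get(phase, 0)


def unit_cost(tokens: int, price_per_1k_tokens: float) -> float:
    """Marginal cost of one more memory operation at a given per-1K-token price."""
    return tokens / 1000 * price_per_1k_tokens
```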

Will the agent handle sensitive data or face regional restrictions?

Sensitivity dictates where memory can live and how it’s stored. Keep confidential raw text out of semantic stores; store references and redacted summaries instead. Isolate by tenant or region and encrypt by default.
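A sketch of "store references and redacted summaries instead," assuming regex-based redaction (production systems typically use a dedicated PII classifier) and a truncated, redacted excerpt standing in for a model-written summary. The entry layout, the `tenant/region/doc_id` reference format, and the function names are hypothetical.

```python
import re
from typing import Dict

# Hypothetical patterns; a real deployment would rely on a proper PII/DLP service.
PATTERNS = {
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str) -> str:
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def to_index_entry(doc_id: str, raw_text: str, tenant: str, region: str) -> Dict[str, str]:
    """What goes into the semantic store: a pointer plus redacted text, never the raw document."""
    return {
        "ref": f"{tenant}/{region}/{doc_id}",           # pointer to the access-controlled original
        "redacted_summary": redact(raw_text)[:200],    # stand-in for a model-written summary
        "tenant": tenant,
        "region": region,
    }


def readable(entry: Dict[str, str], tenant: str, region: str) -> bool:
    # Isolation check: an entry is visible only inside its own tenant and region.
    return entry["tenant"] == tenant and entry["region"] == region
```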

Reference Architecture Patterns

Below are the five patterns I reach for most often. They’re composable, so you can start with one and layer on others as needs grow. Each describes what it is, when to use it, and the design moves that make it work in production.

Stateless + RAG

When in doubt, start here. Pull context on demand from a curated knowledge base using Retrieval-Augmented Generation (RAG); keep session state ephemeral.

Use when: Read-heavy tasks, minimal personalization, or strict compliance zones where you want zero persistent writes.

Why it works: You get consistent, auditable answers without managing long-lived state; quality hinges on curated sources and provenance.

Anti-patterns: “Index the whole drive” without curation; embedding sensitive raw text; relying only on dense search with no keyword/metadata filters; missing source citations.
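A deliberately minimal sketch of the stateless pattern, assuming keyword overlap in place of a real search index: only curated documents are eligible, a metadata filter runs before scoring, and every passage carries its source into the prompt. Nothing is written back, so all session state lives in the returned string. `Doc`, `retrieve`, and `build_prompt` are hypothetical names.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Doc:
    doc_id: str
    text: str
    source: str
    curated: bool


def retrieve(corpus: List[Doc], query: str, k: int = 2, required_source_prefix: str = "") -> List[Doc]:
    """Keyword-overlap scoring with a metadata filter; only curated docs are eligible."""
    terms = set(query.lower().split())
    scored = []
    for doc in corpus:
        if not doc.curated or not doc.source.startswith(required_source_prefix):
            continue  # metadata filter before scoring
        overlap = len(terms & set(doc.text.lower().split()))
        if overlap:
            scored.append((overlap, doc))
    scored.sort(key=lambda pair: pair[0], reverse=True)  # stable: ties keep corpus order
    return [doc for _, doc in scored[:k]]


def build_prompt(question: str, docs: List[Doc]) -> str:
    """Session state lives only in this string; nothing is persisted."""
    context = "\n".join(f"[{i + 1}] {d.text} (source: {d.source})" for i, d in enumerate(docs))
    return f"Answer using only the sources below and cite them by number.\n{context}\n\nQ: {question}"
```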

Working-Memory Buffer + Episodic Log

For multi-step work that spans hours or days, add a small scratchpad for the current step and append concise events (what happened, when, with which tool) to a write-once activity log; compact to summaries on a schedule.

Use when: Multi-step work spanning hours or days, handoffs, retries, rollbacks, or a requirement to explain actions.

Why it works: The active context stays lightweight, while the event trail makes resuming, debugging, and “why did this happen?” straightforward.

Anti-patterns: Storing raw secrets or full chat transcripts; no task/step IDs; never compacting the log; persisting raw chain-of-thought.
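The resume behavior this pattern enables might be sketched as follows, assuming each completed step appends one JSON event to a list standing in for the log. On a rerun, completed steps are replayed from the log and skipped, and the working buffer carries only the latest summary rather than the full history. `run_task` and the event fields are hypothetical.

```python
import json
from typing import Callable, Dict, List


def run_task(task_id: str, steps: List[str], do_step: Callable[[str, str], str],
             log: List[str]) -> Dict[str, str]:
    """Resume a multi-step task from its append-only log, then run only the remaining steps."""
    done: Dict[str, str] = {}
    for line in log:  # replay: rebuild what already happened from concise events
        event = json.loads(line)
        if event["task_id"] == task_id and event["status"] == "ok":
            done[event["step"]] = event["summary"]
    for step in steps:
        if step in done:
            continue  # already checkpointed; skip on resume
        buffer = f"previous: {list(done.values())[-1:]}"  # small working buffer, not full history
        summary = do_step(step, buffer)  # an exception here leaves earlier checkpoints intact
        log.append(json.dumps({"task_id": task_id, "step": step, "status": "ok", "summary": summary}))
        done[step] = summary
    return done
```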

Semantic Hub

Make reusable knowledge a first-class citizen. Store facts and artifacts as chunks with embeddings, alongside sparse (keyword) search and metadata filters (owner, authority, recency). For entity-centric reasoning, add graph links (e.g., Person↔Project↔Policy) so the agent can traverse relationships, not just retrieve text.

Use when: Knowledge reuse across tasks, entity-centric reasoning, deduplicating near-duplicates, and clear provenance.

Why it works: Hybrid retrieval boosts both precision and recall; provenance and tags keep results trustworthy and current.

Anti-patterns: Giant, untagged chunks; stale or duplicative content; embedding confidential raw text; no author/date/source links.
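For the hybrid retrieval and graph traversal, here is a sketch that fuses a dense and a sparse ranking with reciprocal rank fusion (RRF). The post does not name a fusion method, so RRF is our substitution, and the adjacency dictionary is a stand-in for a real graph store.

```python
from collections import defaultdict
from typing import Dict, List, Set


def reciprocal_rank_fusion(rankings: List[List[str]], k: int = 60) -> List[str]:
    """Fuse ranked lists of doc IDs; k=60 is the constant commonly used with RRF."""
    scores: Dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    return sorted(scores, key=lambda d: (-scores[d], d))  # ties broken by ID for determinism


def neighbors(graph: Dict[str, List[str]], start: str, depth: int = 2) -> Set[str]:
    """Breadth-first traversal over entity links, e.g. Person -> Project -> Policy."""
    seen = {start}
    frontier = [start]
    for _ in range(depth):
        next_frontier = []
        for node in frontier:
            for nxt in graph.get(node, []):
                if nxt not in seen:
                    seen.add(nxt)
                    next_frontier.append(nxt)
        frontier = next_frontier
    return seen - {start}
```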

Tool-Scoped Memory

Treat each integration like a mini-system: cache results by arguments, track rate limits and backoff, and version the tool’s schema. The agent stays fast and predictable even when APIs are slow or flaky.

Use when: Heavy API usage, flaky/slow integrations, or tight cost/latency targets.

Why it works: Cuts repeat calls and token spend, stabilizes performance, and makes failures predictable without polluting global memory.

Anti-patterns: Global caches shared across users/tenants; lax eviction leading to stale answers; un-versioned tool contracts; burying tool quirks in prompts.
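A minimal sketch of tool-scoped memory, assuming a tool exposed as a Python callable that raises `TimeoutError` when it is slow or throttled. The cache key includes the tenant (so nothing is shared across tenants), the tool's schema version (so a contract change invalidates old entries), and the canonicalized arguments. `ToolMemory` and its parameters are hypothetical; `sleep` and `clock` are injectable so the behavior can be tested.

```python
import json
import time
from typing import Any, Callable, Dict, Tuple


class ToolMemory:
    """Per-tool, per-tenant cache keyed by (tenant, schema version, canonical args)."""

    def __init__(self, tool: Callable[..., Any], schema_version: str, ttl: float = 300.0,
                 retries: int = 3, base_delay: float = 0.5,
                 sleep: Callable[[float], Any] = time.sleep,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.tool, self.schema_version, self.ttl = tool, schema_version, ttl
        self.retries, self.base_delay, self.sleep, self.clock = retries, base_delay, sleep, clock
        self._cache: Dict[Tuple[str, str, str], Tuple[float, Any]] = {}

    def call(self, tenant: str, **args: Any) -> Any:
        key = (tenant, self.schema_version, json.dumps(args, sort_keys=True))
        hit = self._cache.get(key)
        if hit is not None and self.clock() - hit[0] < self.ttl:
            return hit[1]  # fresh hit: no API call, no tokens
        for attempt in range(self.retries):
            try:
                result = self.tool(**args)
                self._cache[key] = (self.clock(), result)
                return result
            except TimeoutError:
                if attempt == self.retries - 1:
                    raise  # out of retries: surface the failure predictably
                self.sleep(self.base_delay * 2 ** attempt)  # exponential backoff
```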

Shared Team Memory

A role-based space where agents and humans publish curated summaries, decisions, and reusable artifacts, with clear promotion rules from personal -> team -> organization.

Use when: Cross-functional workflows, handoffs, and when you want organizations to learn from each run instead of starting from zero.

Why it works: Captures institutional knowledge with guardrails (ownership, review, and traceable changes) so reuse is safe and reliable.

Anti-patterns: Dumping everything into a shared bucket; no owners/review; mixing private and shared data; skipping policy checks on reads/writes.
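Promotion rules can be sketched as a function that moves an artifact up exactly one level and records who approved it. Requiring a reviewer other than the owner and the pluggable `policy_ok` check are assumptions about what your review and policy gates look like; `Artifact` and `promote` are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Callable, List

LEVELS = ["personal", "team", "organization"]


@dataclass
class Artifact:
    title: str
    body: str
    owner: str
    level: str = "personal"
    history: List[str] = field(default_factory=list)  # traceable changes


def promote(artifact: Artifact, reviewer: str, policy_ok: Callable[[Artifact], bool]) -> Artifact:
    """Move an artifact up one level, only after review and a policy check on the write."""
    idx = LEVELS.index(artifact.level)
    if idx == len(LEVELS) - 1:
        raise ValueError("already organization-wide")
    if reviewer == artifact.owner:
        raise PermissionError("promotion needs a reviewer other than the owner")
    if not policy_ok(artifact):
        raise PermissionError("policy check failed")
    artifact.history.append(f"{artifact.level}->{LEVELS[idx + 1]} approved by {reviewer}")
    artifact.level = LEVELS[idx + 1]
    return artifact
```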

How to Compose

Start with Stateless + RAG to de-risk and ship. When tasks span hours or days, add a small Working-Memory Buffer and an Episodic Log for continuity and replay. As knowledge repeats, layer in a Semantic Hub. If latency or cost comes from APIs, add Tool-Scoped Memory. When people or agents begin to collaborate, promote curated artifacts into Shared Team Memory with review and policy. Architectural complexity should only grow when the work demands it.
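The composition order reads naturally as a small decision function. This is only a restatement of the paragraph above in code; the flag names and `choose_patterns` are hypothetical.

```python
from typing import List


def choose_patterns(*, multi_day: bool = False, repeated_knowledge: bool = False,
                    api_bound_costs: bool = False, collaborative: bool = False) -> List[str]:
    """Grow the architecture only when the work demands it, in the suggested order."""
    patterns = ["Stateless + RAG"]  # always the starting point
    if multi_day:
        patterns += ["Working-Memory Buffer", "Episodic Log"]
    if repeated_knowledge:
        patterns.append("Semantic Hub")
    if api_bound_costs:
        patterns.append("Tool-Scoped Memory")
    if collaborative:
        patterns.append("Shared Team Memory")
    return patterns
```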

Closing Thoughts

Memory is no longer a nice-to-have. It’s the backbone that has the potential to turn agents from clever demos into dependable coworkers. As work shifts from single-turn chats to multi-step, tool-using workflows, continuity, auditability, and revocability move from “engineering details” to product requirements. When building your agents, treat memory like part of your architecture, not residue from a conversation: design it, test it, and put it under change control.

The good news is that you don’t have to do it all at once. Start stateless and add only the memory you need. If you want a safe way to rehearse those choices before production, see “Knowledge Systems and Synthetic Data” on using synthetic datasets to pressure-test retrieval and memory. Measure outcomes and cost as you go so memory pays for itself in speed, quality, and confidence.

References

- Endel Tulving. “Episodic and Semantic Memory.” 1972. (alicekim.ca)

- David L. Greenberg & Mieke Verfaellie. “Interdependence of episodic and semantic memory.” Journal of the International Neuropsychological Society, 2010. (PMC)

- Louis Renoult & Michael D. Rugg. “An historical perspective on Endel Tulving’s episodic–semantic distinction.” Neuropsychologia, 2020. (ScienceDirect)

- Charles Packer, Sarah Wooders, Kevin Lin, Vivian Fang, Shishir G. Patil, Ion Stoica, Joseph E. Gonzalez. “MemGPT: Towards LLMs as Operating Systems.” arXiv (v2), February 2024. (arXiv)

- LangChain. “memory — LangChain documentation.” (developer docs). (LangChain)

- Patrick Lewis, Ethan Perez, Aleksandra Piktus, Fabio Petroni, Vladimir Karpukhin, Naman Goyal, Heinrich Küttler, Mike Lewis, Wen-tau Yih, Tim Rocktäschel, Sebastian Riedel, Douwe Kiela. “Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks.” arXiv, 2020. (arXiv)

- Patrick Lewis et al. “Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks.” NeurIPS 2020 Proceedings. (proceedings.neurips.cc)

- Model Context Protocol. “What is the Model Context Protocol (MCP)?” (official docs). (Model Context Protocol)

- Model Context Protocol. “MCP Docs.” (documentation site). (MCP Protocol)

- Sikuan Yan, Xiufeng Yang, Zuchao Huang, Ercong Nie, Zifeng Ding, Zonggen Li, Xiaowen Ma, Hinrich Schütze, Volker Tresp, Yunpu Ma. “Memory-R1: Enhancing Large Language Model Agents to Manage and Utilize Memories via Reinforcement Learning.” arXiv (v1), August 2025. (arXiv)

- OpenAI. “GPT-5: OpenAI’s latest multimodal model.” 2025.