Last year, most knowledge workers treated generative AI like a smart search box, using it to generate polished responses or creative images through one-off prompts. Today, more than 35 percent of early adopter enterprises report having at least one autonomous agent in production. These agents are capable of planning tasks, calling APIs, and delivering completed work with minimal human input (Gartner, 2025).¹

This rapid shift from single-turn assistants to goal-driven, tool-using agents was anticipated in AIS’s whitepaper, Embrace the Future of Knowledge Work with Generative AI Literacy. In that paper, we explained how building awareness of AI fundamentals and developing readiness through hands-on workflows are critical steps in adopting more advanced AI capabilities.

That next chapter is now coming into focus: agents that can manage entire workflows rather than only generating a first draft. This post shows how agentic AI is moving from theory to practice and what it means for knowledge workers who want to move from prompts to outcomes.

From Assistants to Agents

Early generative AI tools functioned like highly capable assistants. You gave a prompt or series of prompts, and the system returned a response. Agents, however, represent a meaningful evolution. They do more than respond. They take initiative, sequence their actions, and use external tools to get things done.

Assistants improve individual efficiency, but agents rewire entire workflows. For businesses, this means less time coordinating handoffs and more time making decisions and delivering value. Agents have the potential to unlock automation that reflects how people actually work.

Traits of an Agent

- Goal-Oriented: Agents are given an end objective, not just a prompt or question. They pursue outcomes.

- Multi-Step: They break complex tasks into steps and reason through them sequentially.

- Use of Tools: Agents interact with APIs, databases, and applications to take meaningful action.

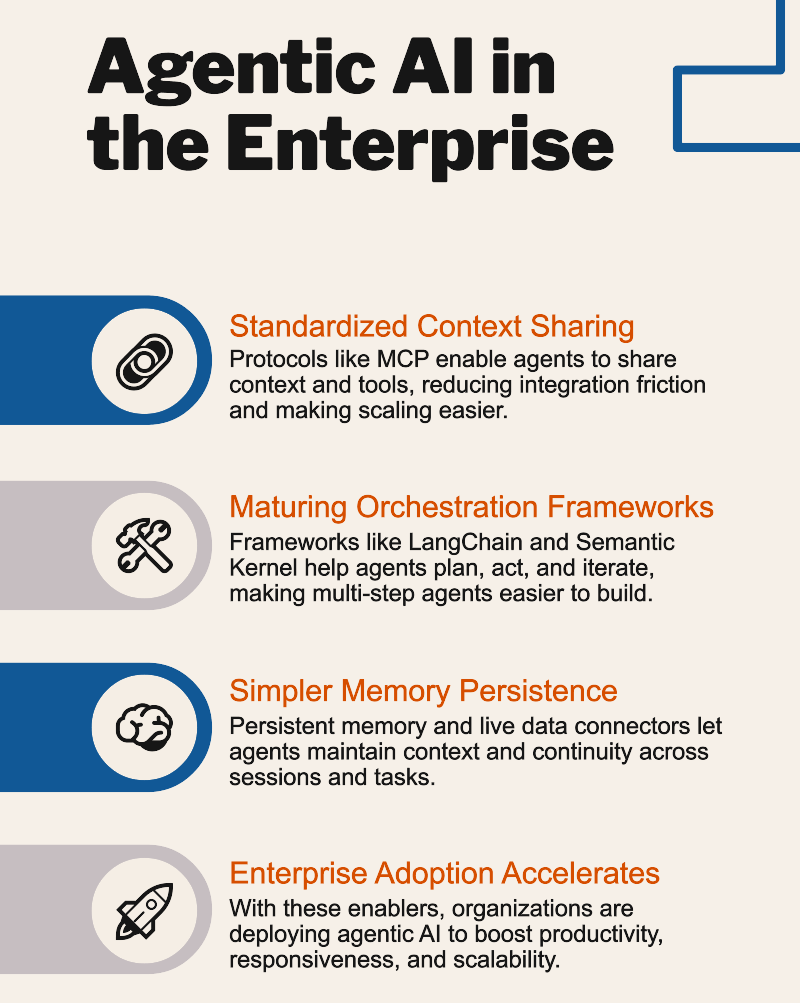

Why Now? Technical Enablers Accelerating Agentic AI

Agentic AI is no longer just a concept. It is being actively tested and deployed in a growing number of enterprise settings. While many forces are driving this trend, including business pressure to increase productivity and improve responsiveness, several key technical developments are making it easier to build and scale generative AI agents.

Standardized Context Sharing and Tool Integration

One of the most impactful enablers is the emergence of standardized protocols for context sharing and tool integration. The Model Context Protocol (MCP) is an open specification that gives agents a common way to package and pass context, tool definitions, and grounding data between language models, vector databases, and orchestration layers. This reduces custom glue code and makes integrations more predictable.

By aligning the way these components communicate, MCP reduces the friction that once made agent development difficult to scale. Within six months of its release, MCP had attracted over 3,500 GitHub stars and contributions from more than 120 organizations, signaling growing cross-industry support. MCP has been positioned as an open, cross-vendor standard for connecting models to tools and data, with public endorsements from major ecosystem players.
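To make the idea of a shared tool format concrete, here is a minimal sketch that builds an MCP-style tool definition and a JSON-RPC 2.0 `tools/call` request using only Python's standard library. The `create_task` tool and its fields are hypothetical. The `name`, `description`, and `inputSchema` shape and the `tools/call` method reflect the MCP specification as we understand it, so check the current spec before relying on the details.

```python
import json

# Hypothetical tool definition in the shape MCP servers advertise:
# a name, a description, and a JSON Schema describing the arguments.
CREATE_TASK_TOOL = {
    "name": "create_task",
    "description": "Create a task in the team planner.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "title": {"type": "string"},
            "owner": {"type": "string"},
            "due_date": {"type": "string", "description": "ISO 8601 date"},
        },
        "required": ["title", "owner"],
    },
}


def build_tool_call(request_id: int, tool: dict, arguments: dict) -> str:
    """Return a JSON-RPC 2.0 request asking a server to run one tool."""
    required = tool["inputSchema"].get("required", [])
    missing = [key for key in required if key not in arguments]
    if missing:
        raise ValueError(f"missing required arguments: {missing}")
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool["name"], "arguments": arguments},
    }
    return json.dumps(message)
```

Because every tool is described the same way, the same `build_tool_call` helper would work for a calendar, email, or ticketing tool without tool-specific glue code.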

Maturing Orchestration Frameworks

A second enabler is the rapid evolution of orchestration frameworks that streamline how agents plan, act, and iterate. Frameworks like LangChain, Microsoft Semantic Kernel, CrewAI, AutoGen, and the Open Agent Architecture are converging on similar execution patterns, lowering the barrier for teams to experiment with multi-step agents. These frameworks offer reusable abstractions for goal decomposition, tool selection, and memory integration. LangChain alone surpassed two million monthly downloads by mid-2025, with more than 70,000 GitHub stars, reflecting how widely these orchestration tools are being embraced.

Simpler Memory Persistence

A third technical enabler is the new ease of giving agents durable memory. What used to require custom session stores, brittle context stitching, and homegrown summarization loops can now be set up with a few standard building blocks. For example, OpenAI’s Responses API provides stateful threads that preserve conversation context across sessions, while services such as DeepLake and approaches like MemGPT offer longer-term knowledge and hybrid memory patterns. These services make continuity the default rather than an advanced feature.

For builders, the pattern is now straightforward. Keep conversational state in threads, keep facts and documents in a knowledge repository, and refresh context with retrieval when the task resumes. Many frameworks handle automatic recap and memory compaction, so prompts stay within context limits while preserving the essentials.
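The pattern above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not any framework's actual API: `Thread`, `add_turn`, and `build_prompt` are hypothetical names, and `summarize` is a placeholder for the model-written recap a real framework would produce.

```python
from dataclasses import dataclass, field


@dataclass
class Thread:
    """Conversation state: a rolling summary plus the most recent turns."""
    summary: str = ""
    turns: list[str] = field(default_factory=list)


def summarize(old_summary: str, dropped: list[str]) -> str:
    # Placeholder: a real agent would ask a model to write this recap.
    recap = "; ".join(turn[:40] for turn in dropped)
    return f"{old_summary} | {recap}" if old_summary else recap


def add_turn(thread: Thread, turn: str, keep_last: int = 4) -> None:
    """Append a turn, folding the oldest turns into the summary."""
    thread.turns.append(turn)
    if len(thread.turns) > keep_last:
        dropped = thread.turns[:-keep_last]
        thread.turns = thread.turns[-keep_last:]
        thread.summary = summarize(thread.summary, dropped)


def build_prompt(thread: Thread, retrieved_facts: list[str]) -> str:
    """Refresh context on resume: summary, retrieved facts, recent turns."""
    parts = ["Summary so far: " + (thread.summary or "(none)")]
    parts += ["Fact: " + fact for fact in retrieved_facts]
    parts += thread.turns
    return "\n".join(parts)
```

The design choice worth noting is the split: the thread holds only conversational state, while facts arrive through retrieval each time the prompt is rebuilt, so the prompt stays bounded no matter how long the task runs.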

Anatomy of an Enterprise Agent

Enterprise agents follow a repeatable lifecycle: capture a goal, plan the steps, assemble context, take tool-based actions, validate intermediate results, update memory, and hand off a final output for review or execution. The notes below show where chunking, embeddings, vector search, and retrieval-augmented generation (RAG) power the context step.

Goal Capture

Every agent begins with a clear objective. This can arrive in the form of a natural-language prompt, a service ticket, or a structured API call. It includes not only the task itself, but also constraints, deadlines, and output expectations. For example, “Generate a compliance summary of this policy change and send it to the legal team by noon.” Human owners or designated systems play a key role here by validating that the goal is appropriate for automation, especially in high-risk domains.
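One way to picture a captured goal is as a small structured record with a gate in front of it. The sketch below is illustrative only: the `Goal` fields, the `HIGH_RISK_DOMAINS` set, and the `ready_for_automation` check are assumptions we made for the example, not part of any specific framework.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Optional

HIGH_RISK_DOMAINS = {"legal", "finance", "hr"}  # assumed policy list


@dataclass
class Goal:
    task: str
    domain: str
    deadline: datetime
    output_format: str
    constraints: list[str] = field(default_factory=list)
    approved_by: Optional[str] = None  # human owner who validated the goal


def ready_for_automation(goal: Goal, now: datetime) -> tuple[bool, str]:
    """Decide whether the agent may start work on this goal."""
    if goal.deadline <= now:
        return False, "deadline has already passed"
    if goal.domain in HIGH_RISK_DOMAINS and goal.approved_by is None:
        return False, "high-risk goal needs a human owner's approval"
    return True, "ok"
```

With this shape, the compliance-summary example becomes a `Goal` in the `legal` domain that stays blocked until a named owner approves it.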

Planning and Decomposition

Once the agent understands the goal, it begins decomposing it into sub-tasks. This might involve identifying the necessary tools, sequencing steps, and determining how outputs from one step will inform the next. Orchestration frameworks like LangChain, Microsoft Semantic Kernel, and AutoGen are designed to support this planning logic. Governance policies define which tools are available to the agent and which actions require human sign-off.
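As a rough sketch of decomposition plus governance, the example below orders sub-tasks by their dependencies using Python's standard `graphlib` module and marks which steps need human sign-off. The `Step` type, the tool names, and the two policy sets are hypothetical. Real orchestration frameworks expose their own planning abstractions.

```python
from dataclasses import dataclass
from graphlib import TopologicalSorter

ALLOWED_TOOLS = {"policy_search", "summarizer", "email"}  # assumed policy
NEEDS_SIGN_OFF = {"email"}  # actions a human must approve first


@dataclass(frozen=True)
class Step:
    name: str
    tool: str
    depends_on: tuple[str, ...] = ()


def order_plan(steps: list[Step]) -> list[tuple[str, bool]]:
    """Return (step name, needs_sign_off) pairs in a runnable order."""
    for step in steps:
        if step.tool not in ALLOWED_TOOLS:
            raise PermissionError(f"{step.name}: tool {step.tool!r} is not allowed")
    # Map each step to the steps that must finish before it can start.
    graph = {step.name: set(step.depends_on) for step in steps}
    tools = {step.name: step.tool for step in steps}
    ordered = TopologicalSorter(graph).static_order()
    return [(name, tools[name] in NEEDS_SIGN_OFF) for name in ordered]
```

`static_order()` raises `graphlib.CycleError` if two steps depend on each other, which is a useful early signal that a plan cannot be executed as written.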

Context Construction

With a plan in place, the agent begins collecting the knowledge needed to ground its actions. This is where techniques like chunking, embeddings, and retrieval-augmented generation (RAG) come into play. Documents are segmented into coherent chunks, converted to embeddings, and stored in a knowledge repository. At run time, the agent retrieves the most relevant chunks to ground its reasoning and actions. MCP carries the structured context and tool metadata to the model.
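The chunk, embed, and retrieve steps can be illustrated with a toy example that runs on the standard library alone. One loud assumption: `embed` here is a bag-of-words counter standing in for a real embedding model, and the in-memory list stands in for a vector database. The function names are ours.

```python
import math
import re
from collections import Counter


def chunk(text: str, max_words: int = 40) -> list[str]:
    """Group whole sentences into chunks of roughly max_words words."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    chunks, current = [], []
    for sentence in sentences:
        words = sentence.split()
        # Start a new chunk rather than splitting a sentence in two.
        if current and len(current) + len(words) > max_words:
            chunks.append(" ".join(current))
            current = []
        current.extend(words)
    if current:
        chunks.append(" ".join(current))
    return chunks


def embed(text: str) -> Counter:
    # Toy stand-in for an embedding model: a bag-of-words count vector.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the query."""
    q = embed(query)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]
```

In production the embeddings would be computed once at ingestion time and stored, rather than recomputed per query as this sketch does for brevity.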

Execution and Tool Calls

The agent proceeds to execute the planned tasks, often by interacting with external systems such as databases, APIs, workflow engines, or document generation tools. It may trigger a calendar event, call a financial system, or write a draft email. Tool access is typically governed through wrappers or plugins provided by orchestration frameworks. Security controls such as authentication scopes and rate limits ensure that the agent operates within approved boundaries.
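A simple way to picture these controls is a wrapper that checks scopes and applies a rate limit before any tool runs. The sketch below is a hand-rolled illustration, not the wrapper API of any particular framework. `GuardedTool`, the scope string, and `create_calendar_event` are hypothetical.

```python
import time
from typing import Callable


class GuardedTool:
    """Wrap a tool function with a scope check and a sliding-window rate limit."""

    def __init__(self, func: Callable[..., object], required_scope: str,
                 max_calls: int, per_seconds: float,
                 clock: Callable[[], float] = time.monotonic):
        self.func = func
        self.required_scope = required_scope
        self.max_calls = max_calls
        self.per_seconds = per_seconds
        self.clock = clock
        self._calls: list[float] = []

    def __call__(self, granted_scopes: set[str], **kwargs):
        if self.required_scope not in granted_scopes:
            raise PermissionError(f"missing scope {self.required_scope!r}")
        now = self.clock()
        # Keep only the calls that fall inside the sliding window.
        self._calls = [t for t in self._calls if now - t < self.per_seconds]
        if len(self._calls) >= self.max_calls:
            raise RuntimeError("rate limit exceeded")
        self._calls.append(now)
        return self.func(**kwargs)


def create_calendar_event(title: str, when: str) -> dict:
    # Hypothetical tool; a real one would call the calendar system's API.
    return {"title": title, "when": when, "status": "created"}
```

The agent never receives the raw function, only the guarded version, so a planning mistake turns into a refused call rather than an unapproved action.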

Intermediate Validation

Before continuing to the next step or delivering results, the agent performs intermediate validation. This may involve checking for hallucinations, enforcing policy constraints, or ensuring outputs match predefined schemas. Automated validators can catch common issues quickly, while human reviewers may be brought in for more sensitive tasks. This layered approach provides both speed and oversight, building user trust and organizational confidence.
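The layered approach can be sketched as a validator that returns a list of problems, followed by a routing decision. Everything named here is an assumption for illustration: the `REQUIRED_FIELDS` schema, the single blocked pattern, and the idea of treating citations of unretrieved sources as a crude proxy for hallucination.

```python
import re

REQUIRED_FIELDS = {"summary": str, "sources": list, "recipient": str}  # assumed schema
BLOCKED_PATTERNS = [re.compile(r"\b\d{3}-\d{2}-\d{4}\b")]  # e.g. SSN-like strings


def validate_output(output: dict, retrieved_ids: set[str]) -> list[str]:
    """Return a list of problems; an empty list means the output passes."""
    problems = []
    for name, expected in REQUIRED_FIELDS.items():
        if not isinstance(output.get(name), expected):
            problems.append(f"field {name!r} missing or not {expected.__name__}")
    # Grounding check: every cited source must be one the agent retrieved.
    sources = output.get("sources")
    if isinstance(sources, list):
        for source in sources:
            if source not in retrieved_ids:
                problems.append(f"unsupported citation: {source}")
    text = str(output.get("summary", ""))
    if any(pattern.search(text) for pattern in BLOCKED_PATTERNS):
        problems.append("summary contains a blocked pattern")
    return problems


def route(problems: list[str], sensitive: bool) -> str:
    """Automated checks run first; sensitive work still goes to a person."""
    if problems:
        return "retry"
    return "human_review" if sensitive else "continue"
```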

Memory and State Update

As the agent works through a task, it stores key outputs, decisions, and error states in persistent memory. This allows future tasks to build on prior context without starting from scratch. Technologies like OpenAI Responses API with persistent threads, DeepLake, and MemGPT are being adopted to support long-running context awareness and information retention.
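As a minimal, vendor-neutral illustration of this step, the sketch below records outputs, decisions, and errors in SQLite through Python's standard library. It does not use or imitate the services named above. The table layout and the `remember` and `recall` helpers are hypothetical.

```python
import json
import sqlite3
from typing import Optional

KINDS = {"output", "decision", "error"}


def open_memory(path: str = ":memory:") -> sqlite3.Connection:
    """Open (or create) the memory store; pass a file path to persist it."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS memory ("
        " id INTEGER PRIMARY KEY AUTOINCREMENT,"
        " task_id TEXT NOT NULL,"
        " kind TEXT NOT NULL,"
        " payload TEXT NOT NULL)"
    )
    return conn


def remember(conn: sqlite3.Connection, task_id: str, kind: str, payload: dict) -> None:
    if kind not in KINDS:
        raise ValueError(f"unknown memory kind: {kind}")
    conn.execute(
        "INSERT INTO memory (task_id, kind, payload) VALUES (?, ?, ?)",
        (task_id, kind, json.dumps(payload)),
    )
    conn.commit()


def recall(conn: sqlite3.Connection, task_id: str, kind: Optional[str] = None) -> list[dict]:
    """Return stored entries for a task in the order they were written."""
    query = "SELECT payload FROM memory WHERE task_id = ?"
    params = [task_id]
    if kind is not None:
        query += " AND kind = ?"
        params.append(kind)
    rows = conn.execute(query + " ORDER BY id", params).fetchall()
    return [json.loads(row[0]) for row in rows]
```

Storing error states alongside outputs matters: when a task resumes, the agent can see what already failed instead of repeating the same call.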

Result Assembly and Handoff

Once the goal is achieved, the agent compiles its final output and delivers it to a human reviewer or downstream system. This could be a completed report, an updated system record, or a draft email ready for approval. At this stage, human oversight plays a final role in reviewing and validating the agent’s work before it is shared externally or used to drive decisions.

Enterprise Agent Scenarios

Below are four scenarios in which agents move beyond single-turn assistance and deliver completed outcomes. We have seen some version of each in action today. Each example covers how it works, where the Model Context Protocol (MCP), chunking, embeddings, and retrieval fit, and what to measure.

Meeting Debrief to Actions

How it works: The agent ingests a set of meeting transcripts, uses chunking to segment by speaker and topic, retrieves relevant policies or project context through a vector database, and produces a concise summary. It extracts decisions and action items, creates tasks in the team’s planner, drafts a recap email, and proposes dates for the next checkpoint. MCP provides a consistent way to pass tool schemas for calendar, email, and task systems, as well as the retrieved context that grounds the summary.

What to measure: Minutes saved per meeting, percentage of action items captured, time from meeting end to first follow-up, reduction in missed follow-ups. Example: “Reduce time from meeting end to first follow-up to under ten minutes.”
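The ingest and extraction steps in this scenario can be sketched with a small transcript parser. This is deliberately simplistic and rests on two assumptions: transcripts arrive as `Speaker: text` lines, and a keyword cue stands in for the model that would actually identify action items.

```python
import re
from itertools import groupby

LINE = re.compile(r"^(?P<speaker>[^:]+):\s*(?P<text>.+)$")
# Keyword cue used only for illustration; a real agent would ask a model.
ACTION_CUE = re.compile(r"\b(I will|I'll|we will|action item)\b", re.IGNORECASE)


def chunk_by_speaker(transcript: str) -> list[tuple[str, str]]:
    """Merge consecutive lines from the same speaker into one chunk."""
    parsed = []
    for line in transcript.splitlines():
        match = LINE.match(line.strip())
        if match:
            parsed.append((match["speaker"], match["text"]))
    return [
        (speaker, " ".join(text for _, text in group))
        for speaker, group in groupby(parsed, key=lambda pair: pair[0])
    ]


def action_items(chunks: list[tuple[str, str]]) -> list[dict]:
    """Flag chunks that look like commitments, keeping the speaker as owner."""
    return [{"owner": speaker, "text": text}
            for speaker, text in chunks if ACTION_CUE.search(text)]
```

Each flagged item already carries an owner, which is what the task-creation and recap-email steps need downstream.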

RFP or Proposal Assembly

How it works: The agent parses the RFP, identifies required sections, and queries a vector store of past responses and approved boilerplate. It drafts tailored content, cites sources from the knowledge base, and routes the package to the right reviewers. MCP standardizes access to file stores, CRM data, and document templates, and carries retrieval context so the draft remains grounded.

What to measure: Time to first draft, reuse rate of approved content, reviewer revision effort, win rate lift on comparable opportunities. Example: “First-draft cycle time cut by 40 percent.”
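Two of these measures reduce to simple arithmetic, sketched below. The helper names are ours, and the reuse definition (a draft paragraph counts as reused only if it matches approved content after case and whitespace normalization) is one assumption among several reasonable ones.

```python
def percent_reduction(baseline: float, current: float) -> float:
    """Percentage drop from a baseline value, e.g. hours to first draft."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return 100 * (baseline - current) / baseline


def _normalize(text: str) -> str:
    return " ".join(text.lower().split())


def reuse_rate(draft_paragraphs: list[str], approved_paragraphs: list[str]) -> float:
    """Share of draft paragraphs that match approved content exactly
    after case and whitespace normalization."""
    if not draft_paragraphs:
        return 0.0
    approved = {_normalize(p) for p in approved_paragraphs}
    reused = sum(1 for p in draft_paragraphs if _normalize(p) in approved)
    return reused / len(draft_paragraphs)
```

For instance, a first draft that used to take 20 hours and now takes 12 is a 40 percent reduction, which is the kind of target quoted above.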

Client or Employee Onboarding

How it works: The agent reads the intake form, validates required fields, and orchestrates account creation, access requests, and orientation scheduling across HR, IT, and facilities. It generates a personalized starter kit and FAQ from the knowledge base using chunking and embeddings to select the most relevant materials. MCP enables a single pattern for tool calls across identity systems, ticketing, and calendars.

What to measure: Lead time from intake to first productive day, number of handoffs, ticket reopen rate, satisfaction score from the new hire or new client. Example: “New hire ready for day-one access in 24 hours.”
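The validate-then-orchestrate flow in this scenario might look like the sketch below. The intake fields and the mapping of teams to requests are hypothetical. In practice each request would become a tool call against the relevant identity, ticketing, or calendar system.

```python
REQUIRED_FIELDS = ("full_name", "start_date", "role", "manager")  # assumed intake schema

# Hypothetical mapping from team to the requests it owns.
REQUESTS_BY_TEAM = {
    "HR": ["create employee record", "schedule orientation"],
    "IT": ["create account", "grant role-based access"],
    "Facilities": ["assign desk and badge"],
}


def missing_fields(form: dict) -> list[str]:
    """List required fields that are absent or blank."""
    missing = []
    for name in REQUIRED_FIELDS:
        value = form.get(name)
        if value is None or not str(value).strip():
            missing.append(name)
    return missing


def build_requests(form: dict) -> list[dict]:
    """Turn a valid intake form into one request per team action."""
    missing = missing_fields(form)
    if missing:
        raise ValueError(f"intake form incomplete: {missing}")
    return [
        {"team": team, "action": action,
         "for": form["full_name"], "needed_by": form["start_date"]}
        for team, actions in REQUESTS_BY_TEAM.items()
        for action in actions
    ]
```

Counting the requests generated per intake also gives a baseline for the "number of handoffs" measure.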

Finance Operations Close Support

How it works: The agent retrieves statements and invoices, reconciles line items against purchase orders, flags anomalies, and drafts suggested accrual entries with clear explanations. Retrieval-augmented generation grounds each suggestion in source documents. MCP transports the context packet and the schema for the finance system so the agent can post a proposed journal entry for human approval.

What to measure: Days to close, percentage of reconciliations completed by the agent, error rate detected in review, cost per close cycle. Example: “One day reduction in monthly close.”
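The reconciliation step can be sketched as a comparison of invoice lines against purchase-order lines. The `Line` record, the one-cent tolerance, and the shape of the proposed entry are all assumptions for illustration. Amounts use `Decimal` to avoid floating-point rounding in currency math.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class Line:
    po_number: str
    sku: str
    amount: Decimal


def reconcile(invoice_lines: list[Line], po_lines: list[Line],
              tolerance: Decimal = Decimal("0.01")) -> list[dict]:
    """Compare invoice lines to purchase-order lines and list anomalies."""
    expected = {(line.po_number, line.sku): line.amount for line in po_lines}
    anomalies = []
    for line in invoice_lines:
        key = (line.po_number, line.sku)
        if key not in expected:
            anomalies.append({"line": key, "issue": "no matching PO line"})
        elif abs(line.amount - expected[key]) > tolerance:
            anomalies.append({"line": key, "issue": "amount mismatch",
                              "difference": line.amount - expected[key]})
    return anomalies


def propose_journal_entry(anomalies: list[dict]) -> dict:
    """Package findings for human approval; nothing is posted automatically."""
    return {"status": "pending_approval", "flagged": len(anomalies), "details": anomalies}
```

Keeping the status at `pending_approval` mirrors the scenario: the agent proposes, and a person in finance decides.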

These scenarios are more realistic than ever for three reasons:

- Standardized context exchange through protocols like MCP reduces custom glue code.

- Maturing orchestration frameworks make planning and tool selection repeatable.

- Simpler persistent threads and retrieval connectors allow agents to stay with a workflow for hours or days.

Closing Thoughts

Agentic AI is moving from concept to practice. The shift from single-turn assistants to goal-driven, tool-using agents is now visible in real workflows. Three technical enablers are making this practical at scale: shared context and tool protocols such as the Model Context Protocol, maturing orchestration frameworks, and simpler persistent memory with live data connectors. When these pieces work together, agents move beyond drafting to dependable follow-through.

The opportunity for knowledge work is straightforward. Give agents clear goals, ground them in your organization’s content, and constrain their actions with simple rules and review points. Start where repetitive effort meets clear business value, measure outcomes, and build from there.

What to Do Next

- Identify one workflow that spans multiple tools and consumes meaningful time, such as meeting debrief to actions or RFP assembly.

- Confirm that your context layer is ready, meaning the data your agent will rely on is accessible and ready for use.

- Prototype with an orchestration framework and MCP where possible to reduce the need for “custom glue”.

- Measure time saved, quality of outcomes, and user satisfaction, then decide whether to expand scope.

- Read the AI Literacy whitepaper for foundational concepts, and look out for an upcoming post on agent governance and risk.

References

- Gartner. “Emerging Tech: Autonomous Agents Will Orchestrate 30% of Enterprise Knowledge Work by 2026.” April 2025.

- OpenAI. “GPT-5: OpenAI’s latest multimodal model.” 2025.

- Reuters. “OpenAI launches new developer tools as Chinese AI startups gain ground.” March 2025.