Virtual Network Service Endpoints is a new feature for securing Azure services (such as Storage and Azure SQL Database) to your VNET. In other words, traffic to these services stays on the Azure backbone. Before this feature, clients reached these services over the Internet (provided their IP address fell within the range allowed by the firewall rules).

However, Virtual Network Service Endpoints is only available to resources connected to Resource Manager VNETs. This means that classic compute resources like VMs and Cloud Services cannot take advantage of this feature out of the box. This blog post describes an approach to overcome this limitation.

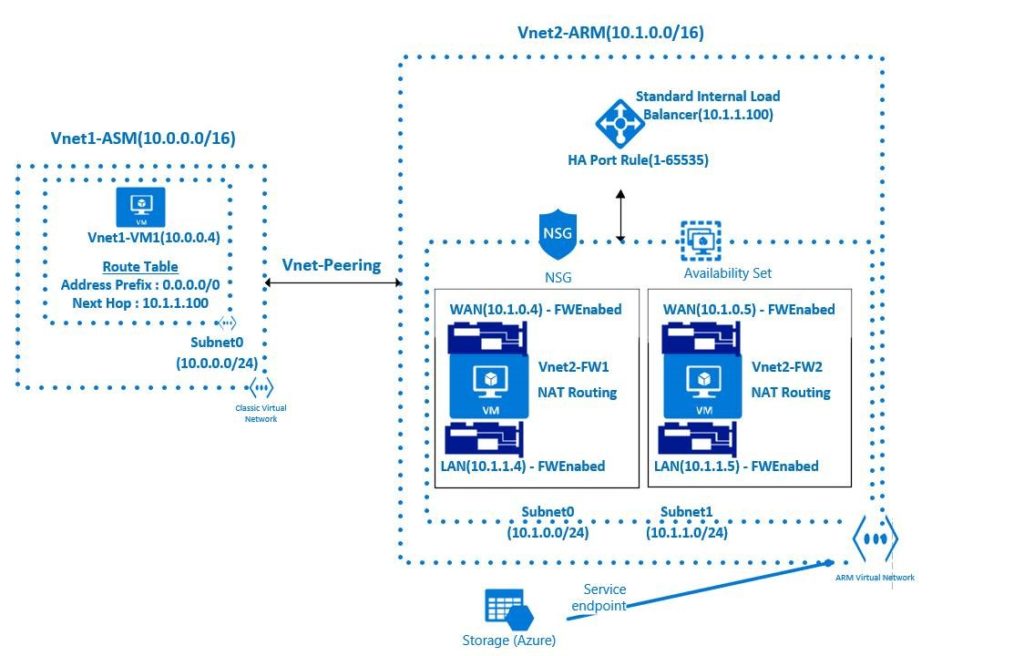

As shown in the diagram above, we have a classic VM (Vnet1-VM1) connected to a classic VNET (Vnet1-ASM). We want to take advantage of Service Endpoints for Storage. More specifically, we want a program running on Vnet1-VM1 to access the storage in such a way that the traffic stays entirely on the Azure backbone.

The first step is to create a Resource Manager VNET (Vnet2-ARM in the diagram above). Vnet2-ARM is broken into two subnets: Subnet0 and Subnet1.
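As a rough illustration of that split, here is a minimal sketch using Python's standard `ipaddress` module. The `10.2.0.0/16` address space and the `/24` subnet size are assumptions made up for this example; the post does not specify the real ranges.

```python
import ipaddress

# Hypothetical address space for Vnet2-ARM; not taken from the actual setup.
vnet2_arm = ipaddress.ip_network("10.2.0.0/16")

# Carve the first two /24 blocks out of the VNET for the two subnets.
blocks = vnet2_arm.subnets(new_prefix=24)
subnet0 = next(blocks)
subnet1 = next(blocks)

print("Subnet0:", subnet0)
print("Subnet1:", subnet1)
```

Any two non-overlapping ranges inside the VNET's address space would serve equally well.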

Next, we will add Service Endpoint-enabled Storage to Vnet2-ARM, as shown in the bottom part of the diagram.

Then we will peer the Vnet1-ASM and Vnet2-ARM VNETs. Peering makes the two VNETs visible to each other. Once peered, these VNETs will essentially appear as one VNET (from a connectivity perspective). At this point we should be able to run our program on Vnet1-VM1 and access the storage… correct?

The answer is actually NO. We need to perform the additional step of setting up a forwarder connected to Vnet2-ARM for the Service Endpoints to work. The forwarder stamps the packets in such a way that a Service Endpoint-enabled service like Azure Storage accepts the incoming requests.

For the purposes of this blog post, we will simply use a Linux VM with two NICs as the forwarder. The default NIC is connected to Subnet0 and the second NIC is connected to Subnet1. We will also need to add a route to the route table that sets our “forwarder” as the next hop. Finally, we will need to enable IP forwarding so that our VM can act as a router.
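To make the role of the route concrete, here is a minimal sketch of how a route table picks a next hop by longest-prefix match. It is a toy model, not Azure's implementation. The forwarder IP, the storage prefix (a documentation-only range) and the route entries are all hypothetical values chosen for illustration.

```python
import ipaddress

# Hypothetical values, for illustration only: none of these come from the setup above.
FORWARDER_IP = "10.2.0.4"          # assumed private IP of the forwarder's NIC in Subnet0
STORAGE_PREFIX = "203.0.113.0/24"  # placeholder standing in for the storage address range

# (destination prefix, next hop) pairs, loosely modelled on a route table.
ROUTES = [
    ("0.0.0.0/0", "Internet"),
    ("10.2.0.0/16", "VnetLocal"),
    (STORAGE_PREFIX, FORWARDER_IP),  # the route that names the forwarder as next hop
]

def next_hop(destination, routes=ROUTES):
    """Pick the next hop for a destination using longest-prefix match."""
    dest = ipaddress.ip_address(destination)
    matches = [
        (ipaddress.ip_network(prefix), hop)
        for prefix, hop in routes
        if dest in ipaddress.ip_network(prefix)
    ]
    # The most specific (longest) matching prefix wins.
    _, hop = max(matches, key=lambda m: m[0].prefixlen)
    return hop

print(next_hop("203.0.113.10"))  # storage-bound traffic
print(next_hop("10.2.1.5"))      # traffic that stays inside the VNET
```

On the forwarder itself, enabling IP forwarding typically involves two things: turning on the Linux kernel setting `net.ipv4.ip_forward`, and enabling the IP forwarding option on the VM's Azure network interfaces.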

At this point, we can access Service Endpoint-enabled Storage from our classic VM.

However, our setup is still not optimal for two reasons:

- We have created a single point of failure.

- Since the forwarder is a VM, we are still responsible for patching it, etc. So, even though our Cloud Services-based solution is fully managed, our forwarder is not.

Let’s try to address these two limitations.

The patching requirement is simple to handle. We can add an OS Patching extension to our forwarder. This extension helps us configure automated patching on a schedule (so we avoid patching during peak times).

To address the single point of failure, we will run two forwarder VMs in an Availability Set. We can then use the HA Ports capability of Azure Load Balancer to direct the traffic to a healthy instance of the forwarder based on health probes. This way, we avoid the “single point of failure” limitation of our forwarder.
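The idea of steering traffic by health probe can be sketched as follows. This is a simplified model of the behaviour, not how Azure Load Balancer is actually implemented. The forwarder names, IP addresses and the `pick_forwarder` helper are hypothetical.

```python
import hashlib

# Hypothetical forwarder instances (name -> private IP), for illustration only.
FORWARDERS = {"forwarder-0": "10.2.0.4", "forwarder-1": "10.2.0.5"}

def pick_forwarder(flow, probe_ok, forwarders=FORWARDERS):
    """Map a flow to one healthy forwarder, or None if every probe is failing.

    flow is a (src_ip, src_port, dst_ip, dst_port, protocol) tuple.
    probe_ok maps a forwarder name to the result of its last health probe.
    """
    healthy = sorted(name for name in forwarders if probe_ok.get(name))
    if not healthy:
        return None
    # Hash the 5-tuple so packets of one flow keep landing on the same instance.
    digest = hashlib.sha256(repr(flow).encode()).digest()
    index = int.from_bytes(digest[:4], "big") % len(healthy)
    return forwarders[healthy[index]]

flow = ("10.1.0.4", 50000, "203.0.113.10", 443, "tcp")
print(pick_forwarder(flow, {"forwarder-0": True, "forwarder-1": True}))
print(pick_forwarder(flow, {"forwarder-0": False, "forwarder-1": True}))
```

When one instance fails its probe, it drops out of the candidate list and all flows go to the remaining instance, which is what removes the single point of failure.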

Summary

By peering classic and Resource Manager VNETs, then adding a “forwarder” appliance in the form of two VMs inside an Availability Set with HA Ports, classic compute can now benefit from the Virtual Network Service Endpoints feature in a resilient and largely self-managing manner.

I would like to thank my colleague Mangat Ram who helped build out and test this solution.