Part one of this series covered several methods and patterns for getting the most out of your Terraform code, focusing specifically on keeping your code and configurations organized and easy to maintain.

Utilizing registries to modularize your infrastructure is only a small part of the improvements you can make to your Terraform code.

One of Terraform's core concepts is how it tracks your infrastructure with a state file. For each grouping of infrastructure you deploy, you typically define a “remote state” stored in an S3 bucket, an Azure Storage account, Terraform Cloud, or another supported backend. Terraform compares this state against your configuration to determine whether any changes need to be applied or whether the infrastructure has drifted from the baseline defined in your source code. How you organize your state files matters, especially if you manage them yourself rather than using Terraform Cloud to perform your infrastructure runs.

Keep in mind that as your development teams and applications grow, you will frequently need to manage multiple development, testing, quality assurance, and production environments. Several projects and components will need to be managed simultaneously, and the number of configurations, variable files, CLI arguments, and provider settings will become untenable over time.

How do you maintain all this infrastructure reliably? Do you track all the applications for one tier in one state file? Or do you break them up and track them separately?

How do you efficiently share variables across environments and applications without defining them multiple times? How do you apply these Terraform projects in a continuous deployment pipeline in a way that is consistent and repeatable across different types of Terraform projects?

At one of our current clients, we were involved with onboarding Terraform as an Infrastructure as Code (IaC) tool. However, we ran into many challenges when trying to deploy multiple tiers (dev, test, stage, production, etc.) across several workstreams, specifically in a continuous manner within a deployment pipeline.


The client I work for has the following requirements for the web UI portion of the services they offer (for context, the cloud provider is Azure):

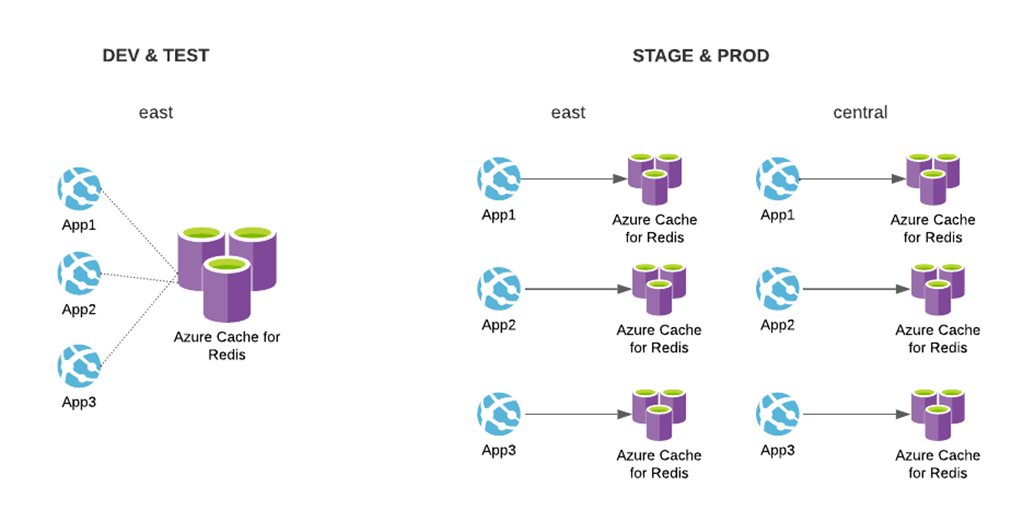

- Each tier has *six applications* for different areas of the United States

- *Each application* has a web server, a Redis cache, an App Service plan, a storage account, and a Key Vault access policy to access a central key store

- Development and test tiers are deployed to a single region

- Applications in the development and test tiers share a Redis cache

- Applications in the stage and production tiers have individual Redis caches

- Stage and production environments are deployed to two regions, east and central

The stage and production tiers each have up to 48 resources; the diagram above represents only three applications and excludes some services. Our client also had several other IT services that needed similar architectural setups; most projects involved deploying six application instances (one for each service area of the United States), each configured differently through application settings.

Initially, our team decided to use the Terraform CLI and track our state files in an Azure Storage Account. Within the application repository, we stored several backend.tf files alongside our Terraform code, one for each tier, and passed the relevant one to `terraform init -backend-config=<PATH>` when initializing a specific environment. We also passed variable files to `terraform [plan|apply|destroy] -var-file=<PATH>` to combine common and tier-specific application setting template files. We adopted this process in our continuous deployment pipeline by ensuring the necessary authentication principals and Terraform CLI packages were available on our deployment agents and then running the constructed Terraform command in the appropriate directory.
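As a rough sketch of that workflow, a tier-specific partial backend configuration for the `azurerm` backend might look like the following. The file names, resource group, storage account, container, and state key are hypothetical placeholders, not the client's actual values.

```hcl
# backends/dev.backend.hcl (hypothetical file name and values)
# Partial backend configuration supplied to `terraform init` at run time.
resource_group_name  = "rg-terraform-state"
storage_account_name = "sttfstatedev"
container_name       = "tfstate"
key                  = "web-ui/dev.terraform.tfstate"

# Example invocation from the project directory (paths are assumptions):
#   terraform init -backend-config=backends/dev.backend.hcl
#   terraform plan -var-file=vars/common.tfvars -var-file=vars/dev.tfvars
```

For this to work, the Terraform code itself would declare an empty `backend "azurerm" {}` block that the file fills in. When several `-var-file` flags are given, values in later files override earlier ones, which is how tier-specific settings could take precedence over common ones.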

This worked, but it presented a few problems as we scaled the process. Our initial approach allowed developers to create Terraform modules specific to their application, using either local modules or shared modules from our private registry. One significant problem arose when we tried to apply these modules in a continuous deployment pipeline: each application had its own Terraform project and configuration that the pipeline needed to accommodate.

Naming Conventions

Let’s also consider something relatively simple, like the naming convention for all the resources in a particular project. Usually, you want the same name prefix on your resources (or apply it as a resource tag) to visually identify which project the resources belong to. Since we had to maintain multiple tiers of environments for these projects (dev, test, stage, prod), we wanted to declare this variable once and share it across environments. We also wanted to declare other variables and optionally override them in specific environments. With the Terraform CLI alone, there is no built-in way to declare how inputs are merged and shared across environments; you have to assemble the right files and flags for every command. In addition, you cannot use expressions, functions, or variables in Terraform backend configuration blocks, forcing you to either hardcode your configuration or apply it dynamically through the CLI; see this issue.
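To make the backend limitation concrete, here is an illustrative sketch. The variable name, storage names, and state key are hypothetical; the commented-out line shows the kind of expression Terraform rejected at the time of writing.

```hcl
# main.tf (illustrative only; all names are hypothetical)
variable "tier" {
  type = string
}

terraform {
  backend "azurerm" {
    resource_group_name  = "rg-terraform-state"
    storage_account_name = "sttfstatedev"
    container_name       = "tfstate"

    # Rejected by `terraform init` with "Variables may not be used here":
    # key = "web-ui/${var.tier}.terraform.tfstate"

    # The value has to be a literal, or be supplied via -backend-config.
    key = "web-ui/dev.terraform.tfstate"
  }
}
```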

We began to wonder if there was a better way to organize ourselves. This is where Terragrunt comes into play. Instead of keeping track of the various Terraform commands, remote state configurations, variable files, and input parameters we needed to consolidate to provision our Terraform projects, what if we had a declarative way to define all of that configuration?

Terragrunt is a minimal wrapper around Terraform that allows you to dynamically assign and inherit remote state configurations, provider information, local variables, and module inputs for your Terraform projects through a hierarchical folder structure with declarative configuration files. It also gives you a flexible and unopinionated way of consolidating everything Terraform needs before it runs a command. Every option you pass to Terraform can be set through a configuration file that inherits, merges, or overrides components from other higher-level configuration files.
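A minimal sketch of this inheritance, assuming a hypothetical folder layout with one root configuration and one child project, could look like this. The storage names, the `name_prefix` input, and the folder names are assumptions for illustration only.

```hcl
# terragrunt.hcl at the repository root (hypothetical layout and names)
remote_state {
  backend = "azurerm"

  # Have Terragrunt write a backend.tf into each project before running Terraform.
  generate = {
    path      = "backend.tf"
    if_exists = "overwrite_terragrunt"
  }

  config = {
    resource_group_name  = "rg-terraform-state"
    storage_account_name = "sttfstate"
    container_name       = "tfstate"
    # path_relative_to_include() resolves to the child folder's path relative
    # to this file, e.g. "dev/app-east", so each project gets its own state key.
    key = "${path_relative_to_include()}/terraform.tfstate"
  }
}

# Inputs shared by every project beneath this folder.
inputs = {
  name_prefix = "webui"
}

# ---------------------------------------------------------------
# dev/app-east/terragrunt.hcl (hypothetical child project)
# ---------------------------------------------------------------
# include {
#   path = find_in_parent_folders()
# }
```

The child file is shown commented out only because it lives in a separate file. Recent Terragrunt releases favor a labeled `include "root"` block and a named root file, so the exact form depends on the version in use.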

Terragrunt allowed us to do these essential things:

- Define a configuration file that tells Terraform which remote state file to use based on application and tier (using the folder structure)

- Run multiple Terraform projects at once with a single command

- Pass outputs from one Terraform project to another using a dependency chain

- Define a configuration file that tells Terraform which application setting template file to apply to an application; we used .tpl files to apply application settings to our Azure compute resources

- Define a configuration file that tells Terraform which variable files to include in your Terraform commands

- Merge common input variables with tier-specific input variables with the desired precedence

- Consistently name and create state files
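Several of these capabilities can be sketched in a single child configuration. This is an illustration under assumptions, not our client's actual setup: the module path, the `key-vault` sibling project, its `key_vault_id` output, the var file names, and the `redis_dedicated` input are all hypothetical.

```hcl
# prod/app-east/terragrunt.hcl (hypothetical project; all names are assumptions)

# Inherit remote state settings and shared inputs from the root configuration.
include {
  path = find_in_parent_folders()
}

terraform {
  # Hypothetical location of the Terraform module to deploy.
  source = "../../modules/web-app"

  # Append var files to the listed Terraform commands.
  extra_arguments "tier_vars" {
    commands = ["plan", "apply", "destroy"]

    # Must exist; shared by every tier.
    required_var_files = [
      "${get_parent_terragrunt_dir()}/common.tfvars",
    ]

    # Used only if present; tier-specific overrides.
    optional_var_files = [
      "${get_terragrunt_dir()}/../tier.tfvars",
    ]
  }
}

# Read outputs from another Terraform project and apply it first.
dependency "key_vault" {
  config_path = "../key-vault"
}

# Merged with the root `inputs`; keys set here win over the root values.
inputs = {
  key_vault_id    = dependency.key_vault.outputs.key_vault_id
  redis_dedicated = true
}
```

Running every project under a folder in dependency order is then a single command, `terragrunt run-all apply` in many Terragrunt releases (the exact subcommand varies by version). One design point worth knowing: Terragrunt passes `inputs` to Terraform as `TF_VAR_` environment variables, so values supplied through `-var-file` take precedence over them.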