Earlier this year AIS had the opportunity to complete a research project using HoloLens, Microsoft’s mixed reality development platform. Exploring the Microsoft HoloLens was a fascinating experience, and for me, quite a novel one as well. I’ve admittedly never become caught up in any virtual or augmented reality experiences, or even played any first-person perspective video games. (I have an Xbox One console in my living room, dutifully serving as a glorified Blu-ray player and online video streamer.) In fact, one of the reasons I went with an Xbox was a desire to develop and deploy apps to it, once Microsoft delivered on its promise of unifying all of its platforms.

So, lacking any firsthand appreciation of immersive computer-generated experiences, I found the ability to see and interact with computer-generated objects inside my own physical space quite engaging.

The Promise of Augmented Reality

Naturally, as a developer, I began to re-imagine the most recent solutions I'd worked on as having some holographic component. Many of these enterprise projects (most of them, actually) are not a natural fit for this technology. But for one, whose users were insurance adjusters in the field inspecting property damage, there did seem to be an opportunity to recognize the type and extent of damage and to overlay data about policies, claims, and the resolutions of similar past claims.

I quickly became eager to develop something that could recognize real-world objects, reason about them, and display relevant data points floating in space, anchored to each object.

Getting Started

I immediately set out to build my own objects and to add contextual information to objects recognized in the physical world. Tutorials and online samples were available to help me get started.

The starting point is to have the right workstation set up, and that begins with running Windows 10. The holographic applications that can be deployed to the HoloLens are Universal App Platform (UAP) applications that run on the single, unified Windows Core that underpins Windows 10 for the desktop and mobile devices, the Xbox console, and, of course, the new HoloLens.

I had Visual Studio 2015 as part of my newly upgraded development environment, but to satisfy the HoloLens SDK prerequisites, I had to update it to Update 3, the latest release at the time. This turned out to be a critical step, and one I ended up having to repeat before everything began working correctly.

But Visual Studio is not the only IDE needed for HoloLens development. Unity, the cross-platform game engine, provides the development environment for the three-dimensional holographic objects in a HoloLens UAP application. Game developers familiar with Unity will certainly hit the ground running faster than an enterprise Web and mobile application developer like me, who is more at home in environments like Visual Studio, Android Studio, and lightweight code editors such as Atom and VS Code.

Ready, Set, Code!

Unity presented me with a dizzying array of assets, settings, and scripts to control them. The scripts are C# code that must be compiled, and compilation is handled in Visual Studio after Unity performs a build step that generates all the code files for a complete Visual Studio solution. In fact, at least two solutions are created: it seems that all of the scripted components are housed together in a solution separate from the one for the main scene.

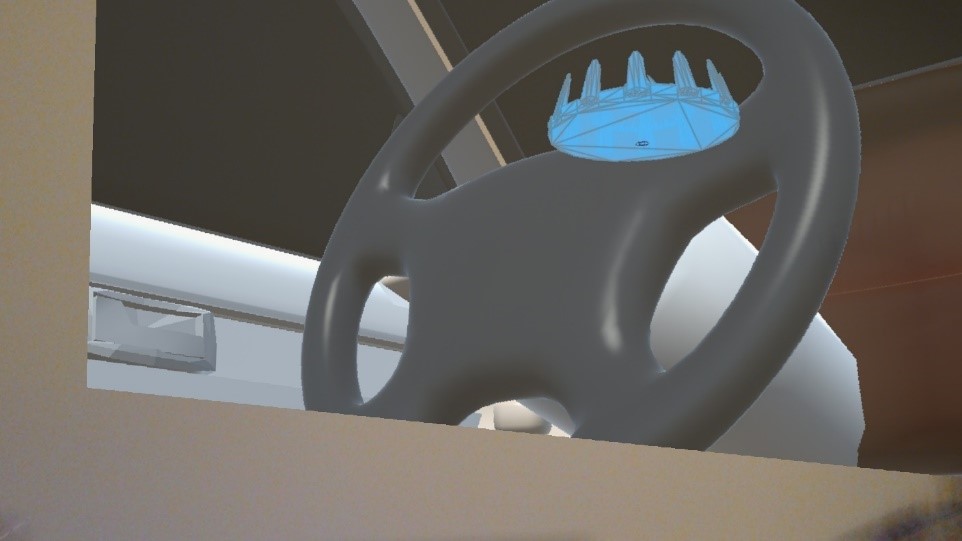

Following the steps of a Mixed Reality Academy tutorial, I was indeed able to build a holographic application that rendered a large 3D model (an automobile) I had sourced from a community website dedicated to sharing such assets. But I soon found it challenging to determine the best settings for scale, perspective, and placement, since I was adjusting values carried over from the tutorial's virtual object, which was roughly the size of a basketball.
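One way to take some of the guesswork out of the scale problem is to compute a uniform scale factor from the model's current size and the real-world size you want. The sketch below assumes Unity's mixed reality convention that one unit equals one meter; the model dimensions and the 4.5 m car length are illustrative assumptions, and the `Bounds`, `uniform_scale_to_length`, and `scaled` names are hypothetical helpers, not part of Unity or the HoloLens SDK.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Bounds:
    """Size of a model's axis-aligned bounding box, in Unity units (1 unit = 1 meter)."""
    x: float
    y: float
    z: float


def uniform_scale_to_length(current: Bounds, target_length_m: float) -> float:
    """Return the uniform scale factor that makes the model's longest side
    measure target_length_m meters while preserving its proportions."""
    longest = max(current.x, current.y, current.z)
    if longest <= 0:
        raise ValueError("model bounds must be positive")
    return target_length_m / longest


def scaled(bounds: Bounds, factor: float) -> Bounds:
    """Apply the same scale factor to every axis of the bounds."""
    return Bounds(bounds.x * factor, bounds.y * factor, bounds.z * factor)


# Hypothetical numbers: a car model that renders about 0.25 m long
# (roughly basketball-sized), scaled up to an assumed real car length of 4.5 m.
model = Bounds(x=0.25, y=0.08, z=0.1)
factor = uniform_scale_to_length(model, 4.5)  # 18.0
```

In Unity, a factor like this would be applied to the object's `Transform.localScale` on all three axes; scaling uniformly keeps the car from looking stretched while you experiment with placement.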

Reality Sets In

I felt lost among the myriad settings, scripts, and controls, and did not feel figuratively in the driver's seat until my first test deployment, when I found myself feeling very small and literally in the driver's seat of my 3D car. In the photo I captured, the original tutorial's ball-sized object was still suspended in space where the unaltered application had last placed it.

I also wanted to see whether the HoloLens could recognize real-world objects, mapping their surfaces to produce holographic assets that could then be shared. Unfortunately, HoloStudio, the HoloLens app that seemed the simplest way to start doing this, would not run on my device. Indeed, a number of my subsequent edits to my oversized car's scale and placement also produced deployed apps that would constantly crash and automatically restart. The HoloLens, I quickly discovered, is sensitive enough to the apps it runs that it does not lend itself well to a novice's trial-and-error approach.

The Right Approach

What I think makes the most sense for developing a genuinely useful enterprise application for the HoloLens is to leverage the experience of seasoned game developers who are well versed in Unity, and perhaps even in Direct3D programming. For everyone else, the learning curve seems much too steep, unless the HoloLens platform attracts enough interest to justify a full-time investment in it as a development platform of its own.

For example, a typical .NET developer with C# and JavaScript skills could contribute most by connecting to APIs for data retrieval and storage, and by maintaining state remotely to synchronize scenes between HoloLens devices. The value here would lie in their experience marshaling business data between data sources and the views users see and manipulate.
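To make the scene-synchronization idea more concrete, here is a minimal sketch, assuming a hypothetical server that relays each device's scene as a dictionary of hologram states keyed by object ID. `HologramState` and `merge_scene` are illustrative names, not part of any HoloLens or Unity API, and last-writer-wins with version numbers is only one of several possible conflict policies.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

Vector3 = Tuple[float, float, float]


@dataclass(frozen=True)
class HologramState:
    """Hypothetical shared state for one hologram in a scene."""
    object_id: str
    position: Vector3  # meters, relative to an assumed shared anchor
    scale: float
    version: int       # bumped by whichever device last changed the object
    device_id: str     # used only to break ties between equal versions


def merge_scene(local: Dict[str, HologramState],
                remote: Dict[str, HologramState]) -> Dict[str, HologramState]:
    """Last-writer-wins merge: for each object keep the state with the higher
    version, breaking ties by device_id so every device converges on the same
    result regardless of which side performs the merge."""
    merged = dict(local)  # copy so neither input is mutated
    for object_id, theirs in remote.items():
        ours = merged.get(object_id)
        if ours is None or (theirs.version, theirs.device_id) > (ours.version, ours.device_id):
            merged[object_id] = theirs
    return merged
```

The deterministic tie-break matters: without it, two headsets that edited the same object at the same version could each keep their own copy and the shared scene would never settle.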

The work patterns established for Web and mobile development, in which server-side and UI development are separated and hand-off points and data contracts are defined, have largely been retired now that full-stack developers can code expertly on both sides of the divide. These patterns could be pressed back into service for a HoloLens project, where a team of skilled engineers, each focused on a different domain, develops a complete, manageable solution that is compelling both in its augmented reality experience and in its ability to deliver real business value.
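As an illustration of such a hand-off point, the sketch below shows a small data contract that a claims API team and a HoloLens UI team might agree on for the insurance adjuster scenario described earlier. The `ClaimOverlay` class and all of its field names are hypothetical, chosen only to show the shape of a contract; JSON is assumed as the wire format.

```python
import json
from dataclasses import asdict, dataclass, fields


@dataclass(frozen=True)
class ClaimOverlay:
    """Hypothetical contract for the data floated next to recognized damage."""
    claim_id: str
    policy_number: str
    damage_type: str
    estimated_cost_usd: float

    def to_json(self) -> str:
        """Serialize for transport from the claims API to the HoloLens client."""
        return json.dumps(asdict(self), sort_keys=True)

    @classmethod
    def from_json(cls, text: str) -> "ClaimOverlay":
        """Parse and validate a payload, failing loudly if the contract is broken.
        Extra fields are ignored so the server can add data without breaking
        older clients."""
        data = json.loads(text)
        if not isinstance(data, dict):
            raise ValueError("payload must be a JSON object")
        expected = {f.name for f in fields(cls)}
        missing = expected - set(data)
        if missing:
            raise ValueError(f"missing fields: {sorted(missing)}")
        return cls(
            claim_id=str(data["claim_id"]),
            policy_number=str(data["policy_number"]),
            damage_type=str(data["damage_type"]),
            estimated_cost_usd=float(data["estimated_cost_usd"]),
        )
```

With a contract like this pinned down, the server-side developers can evolve their APIs and the Unity developers can lay out holograms independently, meeting only at the agreed payload.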