In this article, I am going to explain Distributed Resource Scheduler (DRS) in detail: what it is, how it works behind the scenes, and which settings it offers. The goal of DRS is not to balance the load perfectly across every host. Rather, DRS monitors resource demand and ensures that every virtual machine (VM) gets the resources it is entitled to. When DRS determines that a better host exists for a VM, it recommends moving that VM.

The two primary functions of DRS are:

- Load balancing VMs when the cluster is imbalanced.

- Placing VMs on a suitable host when they are powered on.

Let’s take a closer look at how DRS achieves its goal of ensuring VMs are happy, with effective placement and efficient load balancing.

Effective VM Placement

One of the first steps in ensuring good VM performance is to make sure that the VM gets all the resources it needs as soon as it is powered on. DRS takes the VM’s demand into account when choosing a host, so that the VM is not short of resources when it starts. A VM’s demand is the amount of resources it needs to run, and the way DRS calculates it is described below:

DRS evaluates the demand of every running VM in the cluster. VM demand is the amount of resources that the VM currently needs to run. For CPU, demand is calculated based on the amount of CPU the VM is currently consuming. For memory, demand is calculated based on the following formula.

VM memory demand = Function(Active memory used, Swapped, Shared) + 25% (idle consumed memory)
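To make the formula concrete, here is a minimal Python sketch. The article does not spell out what `Function(...)` does, so this sketch makes a simplifying assumption: it uses active memory alone for that term and ignores swapped and shared memory. The function name and parameters are hypothetical and are not part of any VMware API.

```python
def estimate_memory_demand_mb(active_mb: float, consumed_mb: float,
                              idle_fraction: float = 0.25) -> float:
    """Rough estimate: active memory plus a fraction of idle consumed memory.

    Assumption: active memory stands in for Function(active, swapped, shared).
    """
    # Idle consumed memory is what the VM holds but is not actively touching.
    idle_consumed_mb = max(consumed_mb - active_mb, 0.0)
    return active_mb + idle_fraction * idle_consumed_mb


# A VM that holds 6 GB of host memory but actively uses only 2 GB.
print(estimate_memory_demand_mb(active_mb=2048, consumed_mb=6144))  # 3072.0
```

In this simplified model, a VM with a lot of idle consumed memory counts for noticeably less than the memory it is actually holding on the host.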

Efficient Load Balancing

DRS uses a cluster-level balance metric to make load-balancing decisions. This balance metric is calculated from the standard deviation of resource utilization data from the hosts in the cluster. DRS runs its algorithm once every 5 minutes (by default) to study imbalance in the cluster. If it finds in a given round that the load needs balancing, DRS uses vMotion to migrate running VMs from one ESXi host to another.
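The idea of a balance metric based on standard deviation can be illustrated with a few lines of Python. This is only a sketch: the exact utilization data and normalization that DRS uses are not described here, so the inputs are assumed to be per-host utilization fractions between 0 and 1, and the function name is made up for illustration.

```python
from statistics import pstdev
from typing import Sequence


def cluster_imbalance(host_utilization: Sequence[float]) -> float:
    """Population standard deviation of per-host utilization (0.0 to 1.0)."""
    return pstdev(host_utilization)


balanced = cluster_imbalance([0.50, 0.52, 0.48])
skewed = cluster_imbalance([0.90, 0.30, 0.30])

# The skewed cluster has a much higher imbalance value.
print(balanced < skewed)  # True
```

A value close to zero means the hosts are evenly loaded; the larger the value, the stronger the case for migrating VMs.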

Detecting VM Demand Changes

During each round, along with resource usage data, DRS collects resource availability data from every VM and host in the cluster. Data such as the average and maximum VM CPU usage over the last collection interval show the resource usage trend for a given VM. DRS then correlates the resource usage data with the availability data and runs its load balancing algorithm before taking the necessary vMotion actions to keep the cluster balanced and ensure that VMs always get the resources they need to run.

Cost-Benefit Analysis

vMotion of live VMs comes with a performance cost, which depends on the size of the VM being migrated. If the VM is large, the migration will use a significant share of the CPU and memory on both the source host and the destination host. The benefit, however, comes in the form of better performance for the VMs that remain on the source host, better performance for the migrated VM on the destination host, and improved load balance across the cluster. Therefore, the DRS algorithm evaluates the cost and benefit of each load balancing vMotion before recommending it.
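The trade-off can be sketched as a simple comparison. Everything in the following example is hypothetical: the `CandidateMove` class, the `cost_per_gb` weight, and the idea of expressing cost and benefit in the same unit as the imbalance metric are assumptions made for illustration, not the actual DRS cost model.

```python
from dataclasses import dataclass


@dataclass
class CandidateMove:
    vm_name: str
    vm_memory_gb: float      # larger VMs are more expensive to migrate
    imbalance_before: float  # cluster imbalance if the VM stays
    imbalance_after: float   # cluster imbalance if the VM moves


def migration_cost(move: CandidateMove, cost_per_gb: float = 0.005) -> float:
    """Hypothetical cost that grows linearly with the VM's memory size."""
    return move.vm_memory_gb * cost_per_gb


def migration_benefit(move: CandidateMove) -> float:
    """Benefit expressed as the reduction in cluster imbalance."""
    return move.imbalance_before - move.imbalance_after


def is_worthwhile(move: CandidateMove) -> bool:
    return migration_benefit(move) > migration_cost(move)


small_vm = CandidateMove("app-01", 4, imbalance_before=0.28, imbalance_after=0.10)
large_vm = CandidateMove("db-01", 64, imbalance_before=0.28, imbalance_after=0.20)

print(is_worthwhile(small_vm))  # True
print(is_worthwhile(large_vm))  # False
```

The small VM brings a large improvement at little cost, so the move goes ahead; the large VM costs more to move than the balance it would restore.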

Factors that Affect DRS Behavior

DRS Automation Levels

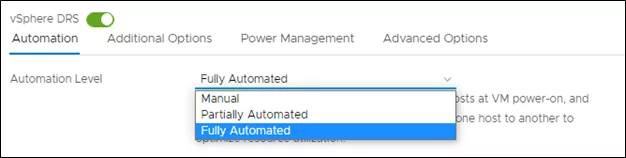

During initial placement and load balancing, DRS generates placement and vMotion recommendations, respectively. DRS can apply these recommendations automatically, or you can apply them manually. DRS has three levels of automation:

- Fully Automated – DRS applies both initial placements and load balancing recommendations automatically.

- Partially Automated – DRS automatically applies recommendations only for initial placement. Load balancing recommendations are shown for you to apply manually.

- Manual – You must apply both initial placement and load balancing recommendations.
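The three levels listed above boil down to a small decision table. The following Python sketch encodes it; the enum and function names are illustrative only and do not come from the vSphere API.

```python
from enum import Enum, auto


class AutomationLevel(Enum):
    MANUAL = auto()
    PARTIALLY_AUTOMATED = auto()
    FULLY_AUTOMATED = auto()


class RecommendationKind(Enum):
    INITIAL_PLACEMENT = auto()
    LOAD_BALANCING = auto()


def applied_automatically(level: AutomationLevel,
                          kind: RecommendationKind) -> bool:
    """Return True if DRS applies the recommendation without user action."""
    if level is AutomationLevel.FULLY_AUTOMATED:
        return True
    if level is AutomationLevel.PARTIALLY_AUTOMATED:
        return kind is RecommendationKind.INITIAL_PLACEMENT
    return False  # Manual: the administrator applies everything


for level in AutomationLevel:
    for kind in RecommendationKind:
        print(level.name, kind.name, applied_automatically(level, kind))
```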

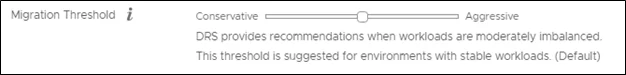

DRS Aggression Levels (Migration Threshold)

The DRS aggression level controls the amount of imbalance that is tolerated in the cluster. DRS has five aggression levels, ranging from 1 (most conservative) to 5 (most aggressive). The more aggressive the level, the less imbalance DRS tolerates in the cluster; conversely, the more conservative the level, the more imbalance DRS accepts. As a result, when you increase the aggression level, you might see DRS initiate more migrations and produce a more even load distribution. By default, the DRS aggression level is set to 3.

When the DRS aggression level is set to 1, DRS does not load balance the VMs. Instead, it only applies move recommendations that are mandatory, either to satisfy hard constraints such as affinity or anti-affinity rules, or to evacuate VMs from a host entering maintenance or standby mode.

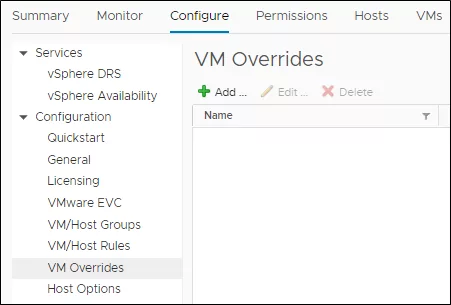

VM Overrides

DRS automation levels and the migration threshold are normally applied at the cluster level. In some cases, you might need DRS to treat some VMs differently. For example, you might decide that DRS should not apply recommendations for a specific VM automatically, or that DRS should not migrate that VM at all. You can set VM overrides under Cluster -> Manage -> Settings -> VM Overrides. Here you can set the automation level for a VM to a value different from the one at the cluster level, or disable DRS for that VM entirely.

VM/Host Rules

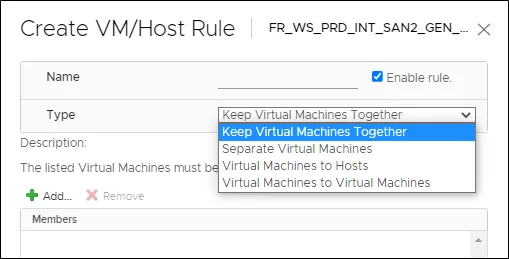

Rules help define special conditions for VMs and hosts in a DRS cluster. Once a rule is set, DRS has to honor it and make recommendations that comply with the rule, in addition to following its placement and load balancing logic.

There are different types of rules that can be set:

- Keep Virtual Machines Together (VM-VM) – This rule ensures that the VMs specified in the rule always run on the same host.

- Separate Virtual Machines (VM-VM) – This rule keeps the specified VMs running on different hosts.

- Virtual Machines to Hosts (VM-Host) – This type of rule is set on groups of one or more VMs and one or more hosts.

A host group or a VM group can be created in the web client under Cluster -> Manage -> Settings -> VM/Host Groups.

In VM-Host rules, there are sub-rules of type should and must. With these sub-rules, you can specify whether a VM group should or must run on a host group, or should not or must not run on it. Sub-rules of type must (mandatory) are always honored by DRS under all circumstances. However, sub-rules of type should (preferential) are dropped if DRS determines that the imbalance in the cluster is very high.
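To illustrate what honoring the two VM-VM rule types means for a given placement, here is a small Python sketch that checks a VM-to-host mapping against keep-together and separate rules. The data structures and the `find_violations` function are hypothetical and only model the rule semantics described above; VM-Host rules are left out for brevity.

```python
from typing import Dict, List, Set


def find_violations(placement: Dict[str, str],
                    keep_together: List[Set[str]],
                    separate: List[Set[str]]) -> List[str]:
    """Return a description of every VM-VM rule the placement breaks."""
    violations = []
    for group in keep_together:
        # All VMs in the group must share a single host.
        if len({placement[vm] for vm in group}) > 1:
            violations.append(f"keep-together violated: {sorted(group)}")
    for group in separate:
        # No two VMs in the group may share a host.
        hosts = [placement[vm] for vm in group]
        if len(hosts) != len(set(hosts)):
            violations.append(f"separate violated: {sorted(group)}")
    return violations


placement = {"web1": "esx01", "app1": "esx01", "db1": "esx02", "db2": "esx02"}
print(find_violations(placement,
                      keep_together=[{"web1", "app1"}],
                      separate=[{"db1", "db2"}]))
# ["separate violated: ['db1', 'db2']"]
```

A scheduler that respects the rules would reject, or correct with a migration, any placement for which this list is not empty.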

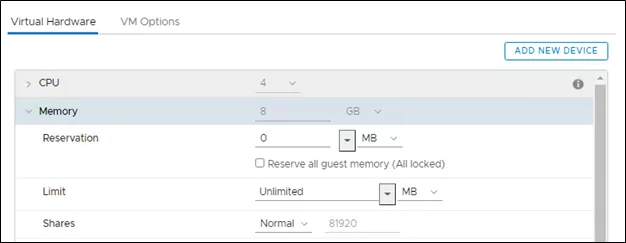

Reservation, Limit, and Shares

DRS provides many tools for you to customize your VMs and workloads according to specific use cases. Reservation, limit, and shares are three such tools borrowed from ESXi’s resource management paradigm.

Reservation

You might need to guarantee compute resources to some critical VMs in your clusters. This is often the case when running applications that cannot tolerate any resource shortage, or when running an application that is always expected to be up and serving requests from other parts of the infrastructure. With the help of reservations, you can guarantee a specified amount of CPU or memory to your critical VMs. Reservations can be made for an individual VM or at the resource pool level. For example, in a resource pool with several VMs, a reservation guarantees resources collectively for all the VMs in the pool.

Limit

In some cases, you might want to limit the resource usage of some VMs in your cluster to prevent them from taking resources away from other VMs. This can be useful, for example, when you want to ensure that a load spike in a non-critical VM does not end up consuming all the resources and starving critical VMs in the cluster.
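Taken together, a reservation acts as a floor and a limit acts as a ceiling on what a VM can be given. The following Python sketch models that as a simple clamp. It is a simplified illustration: the function name is hypothetical, and real resource entitlement also depends on shares and on contention in the cluster.

```python
from typing import Optional


def bounded_entitlement_mhz(demand_mhz: float,
                            reservation_mhz: float = 0.0,
                            limit_mhz: Optional[float] = None) -> float:
    """Clamp a VM's CPU demand between its reservation and its limit."""
    entitled = max(demand_mhz, reservation_mhz)  # reservation is guaranteed
    if limit_mhz is not None:
        entitled = min(entitled, limit_mhz)      # limit is never exceeded
    return entitled


# Critical VM: demand is low right now, but 1000 MHz stays guaranteed.
print(bounded_entitlement_mhz(500, reservation_mhz=1000))  # 1000

# Non-critical VM: a load spike is capped at its 2000 MHz limit.
print(bounded_entitlement_mhz(5000, limit_mhz=2000))  # 2000
```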

Shares

Shares give you a way to prioritize resources for VMs when there is competition in the cluster. They can be set at the VM level or at the resource pool level. By default, a cluster has a resource pool hierarchy with the root resource pool (the cluster itself) at the top and all VMs as its children. Shares are defined as numbers for all the sibling VMs under this root resource pool. By default, shares are distributed equally on a per-resource basis (per vCPU and per unit of memory). This means that, by default, a VM with more configured resources gets more shares than a VM with fewer resources. During resource contention, the resources available at the root resource pool are divided among the children based on their share values.

DRS provides four types of shares for VMs and resource pools – Low, Normal, High, and Custom – to change their priority compared to their siblings. Normal shares are typically 2x Low, and High shares are typically 2x Normal. Custom can be used to set specific share values. When setting custom shares at a VM level, you need to account for all the vCPUs and memory of that VM since shares are assigned based on the amount of configured resources of a VM.
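A short Python sketch shows how share values translate into a split of contended capacity. The per-vCPU values used here (500, 1000, and 2000 for Low, Normal, and High) are an assumption based on commonly cited vSphere defaults and are not stated in this article; they match the 2x ratios described above. The function names are made up for illustration.

```python
from typing import Dict

# Assumed per-vCPU share values; Normal is 2x Low and High is 2x Normal.
CPU_SHARES_PER_VCPU = {"low": 500, "normal": 1000, "high": 2000}


def cpu_shares(level: str, num_vcpus: int) -> int:
    """Total CPU shares of a VM: per-vCPU value times configured vCPUs."""
    return CPU_SHARES_PER_VCPU[level] * num_vcpus


def divide_by_shares(capacity_mhz: float,
                     shares_by_vm: Dict[str, int]) -> Dict[str, float]:
    """Split contended capacity among sibling VMs in proportion to shares."""
    total_shares = sum(shares_by_vm.values())
    return {vm: capacity_mhz * shares / total_shares
            for vm, shares in shares_by_vm.items()}


shares = {
    "vm-a": cpu_shares("normal", 2),  # 2000 shares
    "vm-b": cpu_shares("high", 2),    # 4000 shares
    "vm-c": cpu_shares("low", 4),     # 2000 shares
}
print(divide_by_shares(8000, shares))
# {'vm-a': 2000.0, 'vm-b': 4000.0, 'vm-c': 2000.0}
```

Note that vm-c ends up with the same slice as vm-a despite its Low setting, because it has twice as many vCPUs. This is why custom share values need to account for the configured size of the VM.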

Disclaimer

Thanks for visiting this blog. Everything I shared here is based on my knowledge and experience. Your environment may differ from mine. Please be cautious before executing anything in a production environment.