Part 2: Load testing the RPC-based integration style

In Part 1 of this series I created a fictitious distributed enterprise system that allowed an inventory application to communicate with a purchasing application through an RPC integration style. In this post, I am going to give this distributed system a stress test, see how it fails, and examine the consequences and severity of such a failure. In Parts 3 and 4 of the series I’ll take a different integration approach and update the system to integrate using a messaging style over the RabbitMQ messaging technology.

The test environment

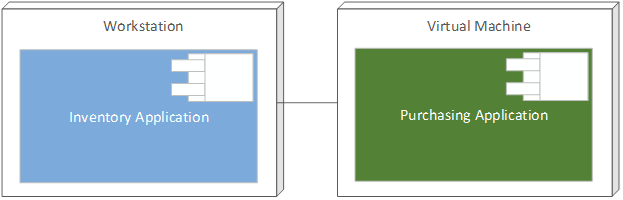

As a reminder, this is how my distributed system is deployed:

I have the inventory application running on my local machine and the purchasing application running on a virtual machine in Hyper-V. I have configured my virtual machine with 2GB of RAM and a single 3GHz processor. This limited power should allow me to stress the system to its limit. The purchasing system is an ASP.NET Web API project hosted in IIS.

Designing the test

In order to put this distributed system under stress, I will need to create a spike test that will simulate a temporary increase in the number of requests executed by the inventory application against the purchasing application. A great way to do this is to utilize Visual Studio’s Load Test feature and have the load test cause the inventory application to fire off a few hundred requests against the purchasing application.

Visual Studio’s Load Test feature offers us a nice set of options for load testing a system. One of these options is to have the load test execute a preconfigured integration test a set number of times in rapid succession. This is exactly what we need to simulate a spike so let’s go ahead and create an integration test that the load test can use.

To do this, I’ll add a new test project to the solution (called Enterprise.Inventory.Test) and create a new TestMethod that I can use to test the inventory application’s StockManager.RequestStockReplenishment method:

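    using System;
    using Microsoft.VisualStudio.TestTools.UnitTesting;

    [TestClass]
    public class IntegrationTests
    {
        [TestMethod]
        public void RequestStockReplenishmentTest()
        {
            // StockManager comes from the inventory application project.
            var stockManager = new StockManager();

            // A fresh GUID gives every request a unique item code.
            // The test passes as long as this call does not throw.
            stockManager.RequestStockReplenishment(
                itemCode: Guid.NewGuid().ToString());
        }
    }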

Running this integration test shows that it completes successfully and, upon completion, I can open up the PurchaseOrders.txt file on the virtual machine and see a line something like this:

Purchase order for item: 09e40502-8be8-496f-9819-f6ec486c9ea0

This shows me that the inventory application, purchasing application and integration test are all working as they should. We can now create a Visual Studio Load Test to execute the integration test a few hundred times and see how the system handles the spike.

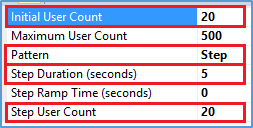

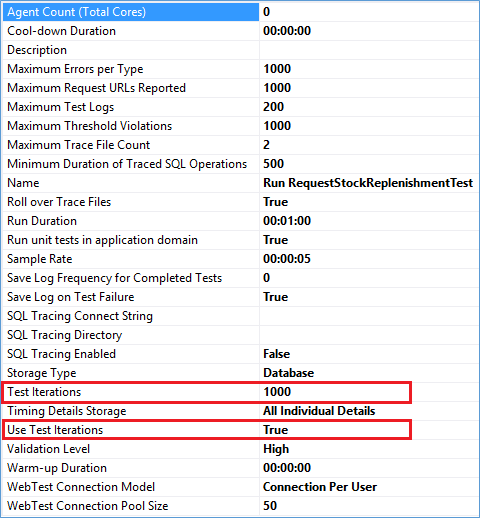

Visual Studio’s Load Test project has an execution pattern called the Step Load Pattern which allows me to start the load test by executing the integration test a certain number of times and then gradually increase that number at set intervals. As each test completes, it immediately starts another test to take its place. I am going to configure my load test to start with 20 simultaneous test runs and then increase that number by 20 every 5 seconds. My load test will run until it has completed a total of 1,000 integration tests.
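To make the step pattern concrete, here is a rough sketch of how the number of simultaneous test runs grows under the settings described above. The names `StepLoadSketch` and `ConcurrentRunsAt` are hypothetical and are not part of Visual Studio. The sketch also assumes that a step is applied exactly at each 5-second mark and ignores any maximum user count configured in the load test.

```csharp
using System;

// Hypothetical helper (not a Visual Studio API): models a step load pattern
// that starts at 20 simultaneous runs and adds 20 more every 5 seconds.
public static class StepLoadSketch
{
    public const int InitialRuns = 20;
    public const int StepSize = 20;
    public const int StepIntervalSeconds = 5;

    // Returns the modeled number of simultaneous test runs after the given time.
    public static int ConcurrentRunsAt(int elapsedSeconds)
    {
        if (elapsedSeconds < 0)
            throw new ArgumentOutOfRangeException(nameof(elapsedSeconds));

        // Integer division counts how many full step intervals have elapsed.
        int completedSteps = elapsedSeconds / StepIntervalSeconds;
        return InitialRuns + completedSteps * StepSize;
    }
}
```

Under these assumptions, `StepLoadSketch.ConcurrentRunsAt(12)` gives 60: the initial 20 runs plus two completed steps of 20 each.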

Executing the test

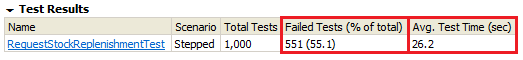

Executing the load test immediately reveals problems with my current architecture. Out of 1,000 test runs, 551 failed to execute successfully. Not only that, but each integration test took on average 26.2 seconds to complete. That is hardly an acceptable response time.

Why did my tests fail? Digging deeper into the test error logs reveals that my requests were timing out. I had configured my requests to time out after 30 seconds, and many of the responses were taking longer than that to return.

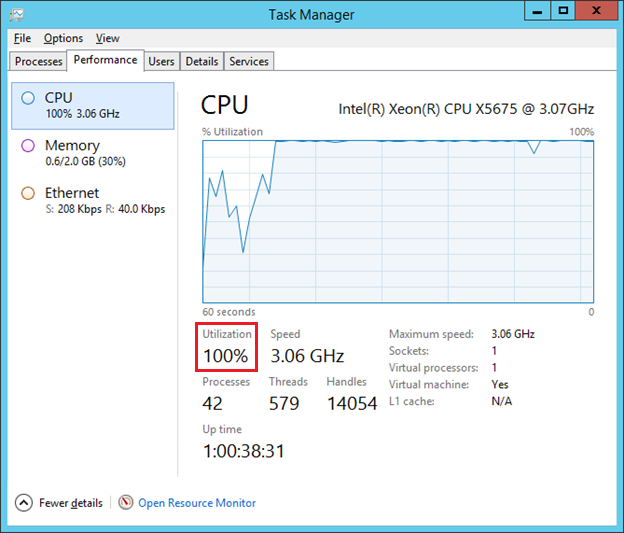

Taking a look at the virtual machine’s Task Manager reveals another troubling situation. My CPU was maxing out, running at 100% utilization for most of the test run.

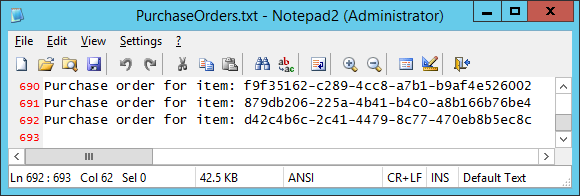

Even more troubling than this is the fact that I have no idea which of the failed requests from the inventory application resulted in a successful purchase order from the purchasing application. Each request timed out before I could determine whether or not it had succeeded. Opening up the PurchaseOrders.txt file (the file that records successful purchase orders) reveals exactly this problem. The load test caused the inventory application to execute 1,000 purchase order requests against the purchasing application, and 551 of them timed out. One might think this means that only the remaining 449 succeeded. But the PurchaseOrders.txt file actually contains 692 successful purchase orders, not 449.

Assuming each passing test wrote exactly one line, that leaves 243 purchase orders (692 − 449) that must have come from requests that timed out. In other words, some of our timed-out requests succeeded and some of them failed, and we have no way of knowing which is which. If we did know which ones failed, we could resubmit them. But as it stands, resubmitting the timed-out requests could easily result in duplicate purchase orders. This is not a great situation to be in. How do we solve this problem?

First attempt at a solution

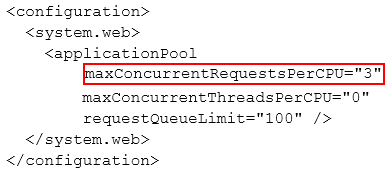

With our current architecture we don’t have too many options available to us. One thing that we can try is to prevent the virtual machine’s CPU utilization from maxing out. We have already seen how this causes unacceptable response times and results in request timeouts. We can achieve this by editing the Aspnet.config file and throttling the number of simultaneous requests allowed by ASP.NET. I am going to set the maxConcurrentRequestsPerCPU setting to 3, which on my single-processor virtual machine effectively limits ASP.NET to processing only 3 requests at a time:

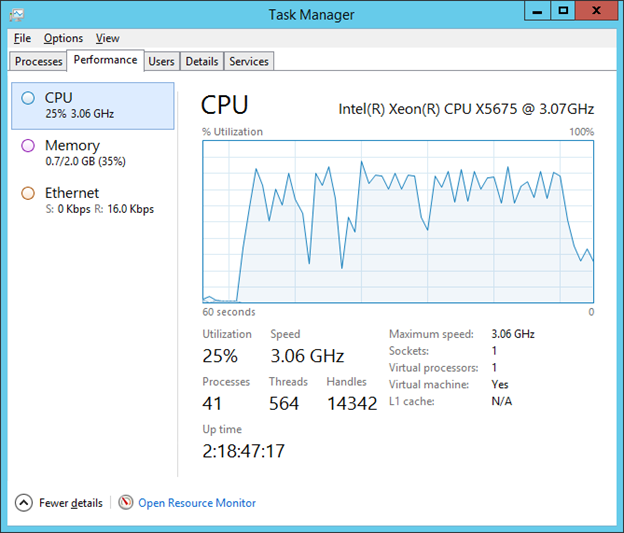
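In text form, a minimal sketch of the relevant fragment looks like the following. It assumes the standard `applicationPool` element that ASP.NET 4 reads from Aspnet.config, and it omits any other elements already present in the file:

```xml
<configuration>
  <system.web>
    <!-- Throttle ASP.NET to 3 concurrent requests per CPU (the VM has one CPU). -->
    <applicationPool maxConcurrentRequestsPerCPU="3" />
  </system.web>
</configuration>
```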

Rerunning the load test now shows the virtual machine CPU usage is indeed under control:

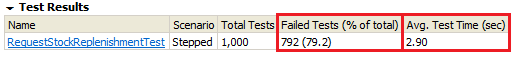

However, most of my tests are still failing.

In my previous test run I was receiving timeout errors. Digging into the error logs this time shows that I am no longer receiving timeout errors but I am instead receiving “503 Service Unavailable” responses. These are exactly the errors I would expect to see after my request queue fills up.

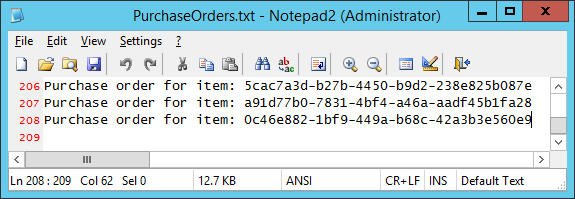

So we have essentially traded timeout errors for “503 Service Unavailable” errors. This is still not great, but it is a slightly better situation to be in: unlike with the timeout errors, none of the requests that received a “503 Service Unavailable” response resulted in a purchase order being placed. With 792 failed tests out of 1,000, I would expect to see 208 successful purchase orders. And that is exactly what I see when I open up the PurchaseOrders.txt file on the virtual machine.

The fact that a “503 Service Unavailable” failure doesn’t result in a purchase order means that I could program in some retry logic: if I receive one of these errors, I’ll just retry a few more times and hope one of those retries succeeds. Again, this is not exactly an ideal situation to be in.
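Such retry logic could be sketched roughly as follows. This is an illustration rather than code from the series: `RetrySketch` and `SendWithRetryAsync` are hypothetical names, and the sketch assumes the inventory application issues its request through `HttpClient` (or anything else that returns an `HttpResponseMessage`). It retries only on a 503 response. A timeout is deliberately left to propagate as an exception, because a timed-out request may already have produced a purchase order.

```csharp
using System;
using System.Net;
using System.Net.Http;
using System.Threading.Tasks;

// Hypothetical helper: retries a request only when the server answers 503,
// because a 503 here means that no purchase order was placed.
public static class RetrySketch
{
    public static async Task<HttpResponseMessage> SendWithRetryAsync(
        Func<Task<HttpResponseMessage>> send, int maxAttempts, TimeSpan delay)
    {
        if (send == null)
            throw new ArgumentNullException(nameof(send));
        if (maxAttempts < 1)
            throw new ArgumentOutOfRangeException(nameof(maxAttempts));

        for (int attempt = 1; ; attempt++)
        {
            // Exceptions (including timeouts) are not caught, so they are never retried.
            HttpResponseMessage response = await send();

            // Return on success, on any non-503 status, or once attempts run out.
            if (response.StatusCode != HttpStatusCode.ServiceUnavailable
                || attempt == maxAttempts)
            {
                return response;
            }

            response.Dispose();
            await Task.Delay(delay); // give the server time to drain its queue
        }
    }
}
```

The `send` delegate should build a fresh request on every call, since request content generally cannot be reused once it has been sent. A fixed delay keeps the sketch short; the choice of delay and attempt count is a judgment call that depends on how quickly the purchasing application can work through its queue.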

Wrap-Up

In Part 1 of this series we looked at creating a distributed system for testing purposes. We wanted to see how a system behaves when it doesn’t have enough resources to handle a spike in load. We created a simple inventory application that made requests to a purchasing application deployed on a Hyper-V virtual machine using an RPC-style architecture. The purchasing application simulated a long-running, CPU-intensive operation whenever it received a request from the inventory application.

In this post we put the distributed system under stress and saw that the RPC-style architecture couldn’t gracefully handle a spike in load if it didn’t have the immediate resources available. In our first attempt we maxed out the virtual machine’s CPU and many of the inventory application’s requests simply timed out. In our second attempt we limited the number of concurrent requests allowed by ASP.NET and saw that we could keep the CPU usage under control, but the inventory application received many “503 Service Unavailable” responses from the purchasing application.

In Part 3 of this series we are going to look at an alternative architecture that uses messaging as the underlying integration style. We are going to replace the RPC-style architecture with an architecture that uses RabbitMQ to communicate between the inventory application and the purchasing application. In Part 4 we will run the same load test we have been using all along and compare the results with the results from the previous tests.

You can download all the source code for this series from this GitHub repository.